RPC Communication Principles Explained

HDFS, YARN, and MapReduce call one another and exchange data through RPC (Remote Procedure Call).
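At its core, an RPC call lets a client invoke a method on an interface as if it were local. A client-side stub turns the call into a request, and the server dispatches that request to its real implementation. The JDK-only sketch below illustrates the idea inside a single JVM, with no networking: a `java.lang.reflect.Proxy` plays the client-side stub, reflection performs the server-side dispatch, and a list of "sent" requests stands in for the network. The `Request` class and the `dispatch` and `getProxy` helpers are hypothetical, written only for illustration; they are not Hadoop APIs. As far as we understand, Hadoop's RPC engines also give the client a JDK dynamic proxy, but the code here is not Hadoop's.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class RpcRolesDemo {

    // Protocol: the interface both sides agree on.
    public interface RPCProtocol {
        long versionID = 123L;
        void mkdirs(String s);
    }

    // Server: the real implementation; the client code never references it directly.
    public static class NNServer implements RPCProtocol {
        public final List<String> created = new ArrayList<>();

        @Override
        public void mkdirs(String path) {
            created.add(path);
            System.out.println("Server creating directory: " + path);
        }
    }

    // Hypothetical request message: what an RPC layer would serialize and send.
    public static final class Request {
        public final String methodName;
        public final Class<?>[] paramTypes;
        public final Object[] args;

        public Request(String methodName, Class<?>[] paramTypes, Object[] args) {
            this.methodName = methodName;
            this.paramTypes = paramTypes;
            this.args = args;
        }
    }

    // Server-side dispatch: look up the method by name and parameter types, then invoke it.
    public static Object dispatch(Object instance, Request req) throws Exception {
        Method m = instance.getClass().getMethod(req.methodName, req.paramTypes);
        return m.invoke(instance, req.args);
    }

    // Client-side stub: a JDK dynamic proxy that turns every call into a Request.
    @SuppressWarnings("unchecked")
    public static <T> T getProxy(Class<T> protocol, Object serverInstance, List<Request> sent) {
        InvocationHandler handler = (proxy, method, args) -> {
            Request req = new Request(method.getName(), method.getParameterTypes(), args);
            sent.add(req); // stands in for writing the request to the network
            return dispatch(serverInstance, req);
        };
        return (T) Proxy.newProxyInstance(
                protocol.getClassLoader(), new Class<?>[] {protocol}, handler);
    }

    public static void main(String[] args) {
        NNServer server = new NNServer();
        List<Request> sent = new ArrayList<>();
        RPCProtocol client = getProxy(RPCProtocol.class, server, sent);
        client.mkdirs("/input");
        System.out.println("Requests sent: " + sent.size());
    }
}
```

The client code only holds an `RPCProtocol` reference, yet `client.mkdirs("/input")` ends up running `NNServer.mkdirs`. A real RPC layer would serialize each `Request` and send it over a socket instead of appending it to a list.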

1. A simple implementation using Hadoop RPC

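The example below simulates how the three parts of RPC work together: the client, the server, and the communication protocol.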

- Create the RPC protocol

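```java
// The protocol: an interface shared by the client and the server.
public interface RPCProtocol {

    // Protocol version number; the client passes it to RPC.getProxy.
    long versionID = 123L;

    void mkdirs(String s);
}
```

- Create the RPC server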

java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.ipc.RPC;

import java.io.IOException;

public class NNServer implements RPCProtocol{

@Override

public void mkdirs(String path) {

System.out.println("服务器创建目录:" + path);

}

public static void main(String[] args) throws IOException {

RPC.Server server = new RPC.Builder(new Configuration())

.setBindAddress("localhost")

.setPort(9090)

.setProtocol(RPCProtocol.class)

.setInstance(new NNServer())

.build();

System.out.println("服务器开始工作");

server.start();

}

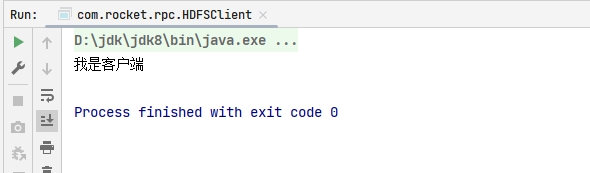

}- 创建RPC客户端

java

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.ipc.RPC;

import java.io.IOException;

import java.net.InetSocketAddress;

public class HDFSClient {

public static void main(String[] args) throws IOException {

com.rocket.rpc.RPCProtocol client = RPC.getProxy(RPCProtocol.class,

RPCProtocol.versionID,

new InetSocketAddress("localhost", 9090),

new Configuration());

System.out.println("我是客户端");

client.mkdirs("/input");

}

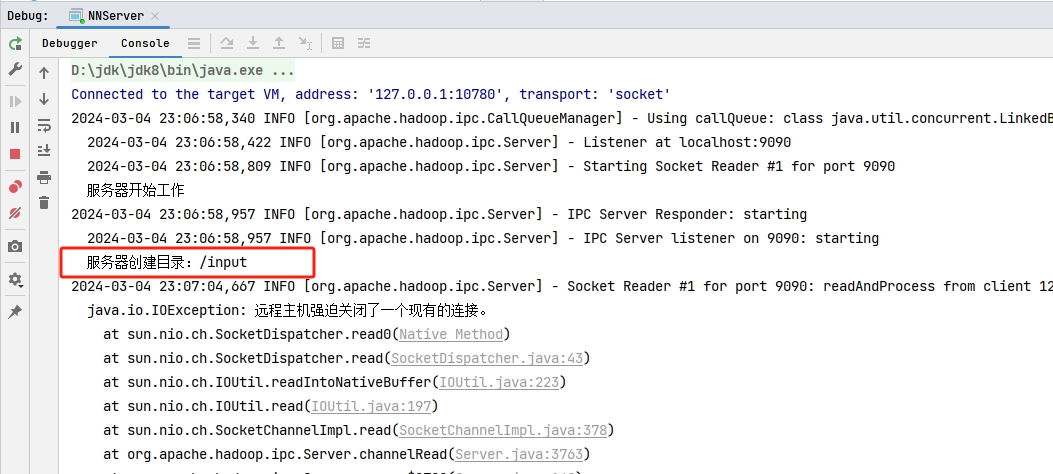

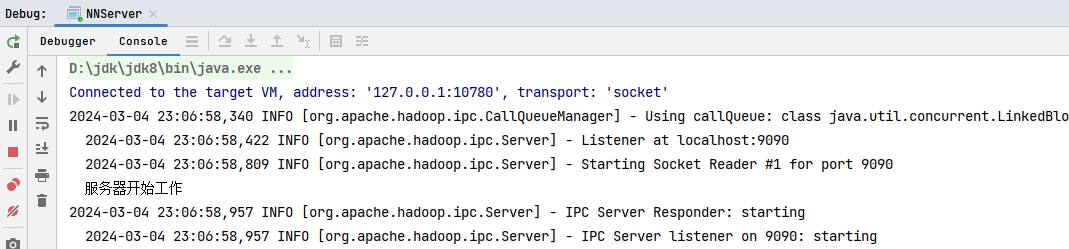

}- 运行测试 启动服务端NNServer 观察控制台打印:服务器开始工作 在控制台Terminal窗口输入,jps,查看到NNServer服务

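```cmd
C:\Users\mi>jps
13300 RemoteMavenServer36
17012 Jps
344 Launcher
15676 NNServer
```

Start the client HDFSClient. The server's console then prints the directory-creation message for `/input`, which shows that `mkdirs` ran on the server rather than in the client process.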

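To make visible what sits between `RPC.getProxy` and `RPC.Server` in the walkthrough above, here is a minimal sketch of the same client/server/protocol exchange over a loopback socket, using only the JDK. It is a deliberate simplification, not Hadoop's wire format. Each call opens a new connection and uses Java serialization to send the protocol version, the method name, the parameter types, and the arguments. The server then rejects any call whose version differs from its own. `MiniRpc`, `startServer`, and `getProxy` are hypothetical names, and port 0 lets the OS pick a free port instead of the fixed 9090 used above.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.concurrent.CopyOnWriteArrayList;

public class MiniRpc {

    // Protocol: same shape as the tutorial's RPCProtocol.
    public interface RPCProtocol {
        long versionID = 123L;
        void mkdirs(String s);
    }

    // Server-side implementation; records paths so the effect can be checked.
    public static class NNServer implements RPCProtocol {
        public final CopyOnWriteArrayList<String> created = new CopyOnWriteArrayList<>();
        @Override
        public void mkdirs(String path) {
            created.add(path);
            System.out.println("Server creating directory: " + path);
        }
    }

    // Hypothetical server: listens on a free loopback port, one request per connection.
    public static ServerSocket startServer(Object instance, long serverVersion) throws IOException {
        ServerSocket ss = new ServerSocket(0, 50, InetAddress.getLoopbackAddress());
        Thread t = new Thread(() -> {
            while (!ss.isClosed()) {
                try (Socket s = ss.accept()) {
                    ObjectOutputStream out = new ObjectOutputStream(s.getOutputStream());
                    out.flush(); // send the stream header early so neither side blocks
                    ObjectInputStream in = new ObjectInputStream(s.getInputStream());
                    long clientVersion = in.readLong();
                    String methodName = in.readUTF();
                    Class<?>[] paramTypes = (Class<?>[]) in.readObject();
                    Object[] callArgs = (Object[]) in.readObject();
                    if (clientVersion != serverVersion) {
                        out.writeBoolean(false);
                        out.writeObject("version mismatch: " + clientVersion + " != " + serverVersion);
                    } else {
                        // Reflective dispatch to the registered instance.
                        Method m = instance.getClass().getMethod(methodName, paramTypes);
                        Object result = m.invoke(instance, callArgs);
                        out.writeBoolean(true);
                        out.writeObject(result);
                    }
                    out.flush();
                } catch (IOException | ReflectiveOperationException e) {
                    // close() makes accept() throw; the loop condition then stops the thread.
                }
            }
        });
        t.setDaemon(true);
        t.start();
        return ss;
    }

    // Hypothetical client stub: a JDK dynamic proxy that sends each call over a socket.
    @SuppressWarnings("unchecked")
    public static <T> T getProxy(Class<T> protocol, long clientVersion, InetSocketAddress addr) {
        return (T) Proxy.newProxyInstance(protocol.getClassLoader(), new Class<?>[] {protocol},
                (proxy, method, callArgs) -> {
                    try (Socket s = new Socket()) {
                        s.connect(addr);
                        ObjectOutputStream out = new ObjectOutputStream(s.getOutputStream());
                        out.writeLong(clientVersion);
                        out.writeUTF(method.getName());
                        out.writeObject(method.getParameterTypes());
                        out.writeObject(callArgs);
                        out.flush();
                        ObjectInputStream in = new ObjectInputStream(s.getInputStream());
                        boolean ok = in.readBoolean();
                        Object payload = in.readObject();
                        if (!ok) throw new IllegalStateException((String) payload);
                        return payload;
                    }
                });
    }

    public static void main(String[] args) throws IOException {
        NNServer impl = new NNServer();
        try (ServerSocket ss = startServer(impl, RPCProtocol.versionID)) {
            System.out.println("Server started");
            InetSocketAddress addr = new InetSocketAddress(InetAddress.getLoopbackAddress(), ss.getLocalPort());
            RPCProtocol client = getProxy(RPCProtocol.class, RPCProtocol.versionID, addr);
            System.out.println("Client started");
            client.mkdirs("/input");
        }
    }
}
```

Running `main` prints the startup messages, followed by the directory-creation message from the server thread. Passing a different version to `getProxy` makes the call fail with an `IllegalStateException`, which mirrors the purpose of `versionID` in `RPCProtocol`. How Hadoop itself enforces version compatibility depends on its RPC engine and is not reproduced here.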