DataNode工作机制

1. DataNode工作流程图

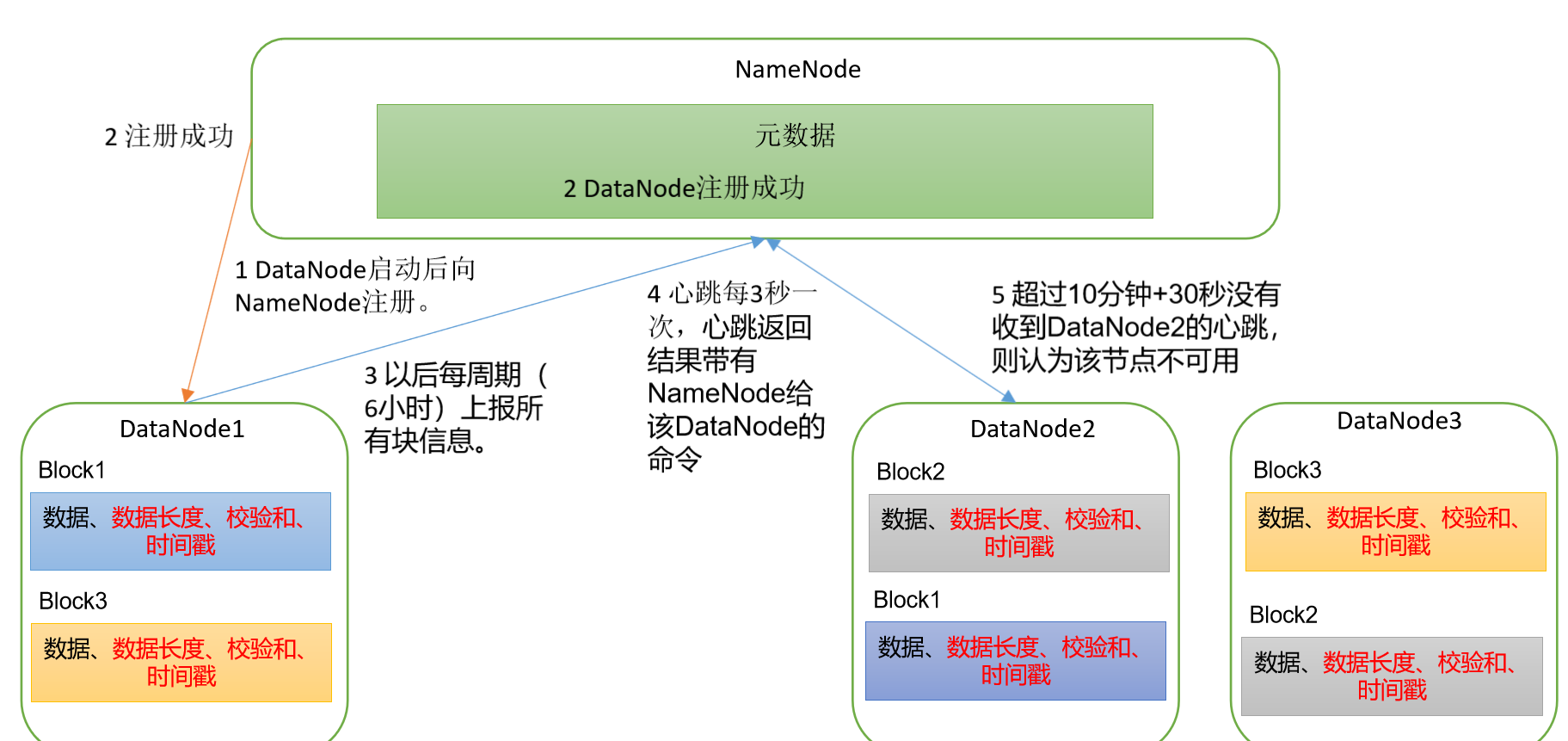

2. DataNode工作流程说明

(1)一个数据块在DataNode上以文件形式存储在磁盘上,包括两个文件,一个是数据本身,一个是元数据包括数据块的长度,块数据的校验和,以及时间戳。

(2)DataNode启动后向NameNode注册,通过后,周期性(6小时)的向NameNode上报所有的块信息。 DN向NN汇报当前解读信息的时间间隔,默认6小时;

xml

<property>

<name>dfs.blockreport.intervalMsec</name>

<value>21600000</value>

<description>Determines block reporting interval in milliseconds.</description>

</property>DN扫描自己节点块信息列表的时间,默认6小时

xml

<property>

<name>dfs.datanode.directoryscan.interval</name>

<value>21600s</value>

<description>Interval in seconds for Datanode to scan data directories and reconcile the difference between blocks in memory and on the disk.

Support multiple time unit suffix(case insensitive), as described

in dfs.heartbeat.interval.

</description>

</property>(3)心跳是每3秒一次,心跳返回结果带有NameNode给该DataNode的命令如复制块数据到另一台机器,或删除某个数据块。如果超过10分钟没有收到某个DataNode的心跳,则认为该节点不可用。 (4)集群运行中可以安全加入和退出一些机器。

3. 数据完整性保证

思考:如果电脑磁盘里面存储的数据是控制高铁信号灯的红灯信号(1)和绿灯信号(0),但是存储该数据的磁盘坏了,一直显示是绿灯,是否很危险?同理DataNode节点上的数据损坏了,却没有发现,是否也很危险,那么如何解决呢? 如下是DataNode节点保证数据完整性的方法。

(1)当DataNode读取Block的时候,它会计算CheckSum。

(2)如果计算后的CheckSum,与Block创建时值不一样,说明Block已经损坏。

(3)Client读取其他DataNode上的Block。

(4)常见的校验算法crc(32),md5(128),sha1(160)

(5)DataNode在其文件创建后周期验证CheckSum。

4. 掉线时限参数设置

提示

需要注意的是hdfs-site.xml 配置文件中的heartbeat.recheck.interval的单位为毫秒,dfs.heartbeat.interval的单位为秒。

xml

<property>

<name>dfs.namenode.heartbeat.recheck-interval</name>

<value>300000</value>

</property>

<property>

<name>dfs.heartbeat.interval</name>

<value>3</value>

</property>