HDFS Cluster: Dynamic Scale-Out and Scale-In

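As the business grows and data volume keeps increasing, the capacity of the existing DataNodes can no longer meet storage needs, so new DataNodes have to be added to the running cluster dynamically.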

1. Environment preparation

1.1 Change the IP address and hostname
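On a CentOS 7-style network-scripts setup, the ifcfg-ens33 edit in this step usually switches the interface from DHCP to a static address, and /etc/hostname should contain only the new name (hadoop105). The sketch below merely prints the keys typically set for a static address; the helper name `ifcfg_static_lines` and the 192.168.10.x values are hypothetical placeholders, so substitute your own network's addresses.

```sh
#!/bin/sh
# Hypothetical helper: print the ifcfg-ens33 keys for a static IPv4 address.
# Arguments: IP address, gateway, DNS server (example values only).
ifcfg_static_lines() {
    ip="$1"
    gateway="$2"
    dns="$3"
    printf '%s\n' \
        "BOOTPROTO=static" \
        "ONBOOT=yes" \
        "IPADDR=${ip}" \
        "GATEWAY=${gateway}" \
        "DNS1=${dns}"
}

# Example for the new node hadoop105 (assumed addressing scheme).
ifcfg_static_lines 192.168.10.105 192.168.10.2 192.168.10.2
```

Printing rather than overwriting keeps the other keys already in the file (such as DEVICE or UUID) untouched; merge the lines in by hand with vim.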

```sh
[root@hadoop105 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33
[root@hadoop105 ~]# vim /etc/hostname
```

1.2 Create a user and grant it sudo privileges

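```sh
[root@hadoop105 ~]# useradd jack
[root@hadoop105 ~]# passwd jack
[root@hadoop105 ~]# vi /etc/sudoers
# grant jack sudo rights, e.g. with a line such as: jack ALL=(ALL) NOPASSWD:ALL
```

1.3 Copy the /opt/module directory and /etc/profile.d from hadoop102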

```sh
[jack@hadoop105 ~]$ sudo mkdir /opt/module
[jack@hadoop105 ~]$ sudo mkdir /opt/software
[jack@hadoop105 ~]$ sudo chown jack:jack /opt/software
[jack@hadoop105 ~]$ sudo chown jack:jack /opt/module
[jack@hadoop102 opt]$ scp -r module/* jack@hadoop105:/opt/module/
[jack@hadoop102 opt]$ sudo scp /etc/profile.d/my_env.sh root@hadoop105:/etc/profile.d/my_env.sh
[jack@hadoop105 hadoop-3.3.6]$ source /etc/profile
```

1.4 Delete Hadoop's historical data on hadoop105

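The data/ and logs/ directories under hadoop-3.3.6 were copied over from hadoop102. data/ holds hadoop102's DataNode storage metadata, including its unique DataNode ID; if it were kept, hadoop105 would register with the same ID as hadoop102 and conflict with it.

```sh
[jack@hadoop105 hadoop-3.3.6]$ rm -rf data/ logs/
```

1.5 Configure passwordless SSH login from hadoop102 and hadoop103 to hadoop105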

```sh
[jack@hadoop102 .ssh]$ ssh-copy-id hadoop105
[jack@hadoop103 .ssh]$ ssh-copy-id hadoop105
```

2. Start the new node hadoop105

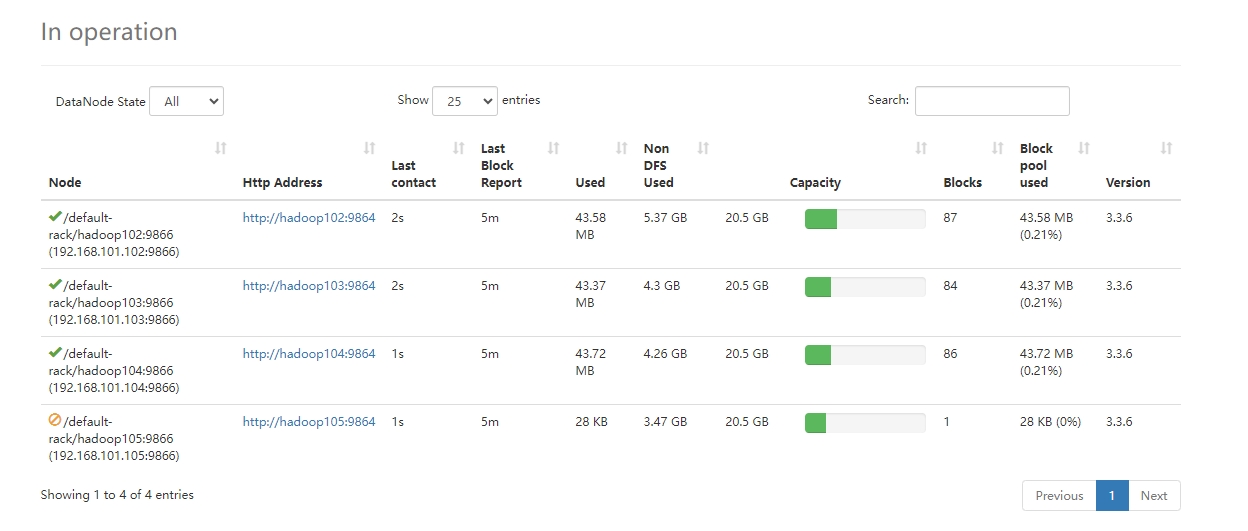

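- Simply start the DataNode and it joins the cluster: the configuration copied from hadoop102 (fs.defaultFS in core-site.xml) already points it at the NameNode.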

sh

## 新加的机器肯定是DataNode而不是NameNode,想想为何?

[jack@hadoop105 hadoop-3.3.6]$ hdfs --daemon start datanode

WARNING: /opt/module/hadoop-3.3.6/logs does not exist. Creating.

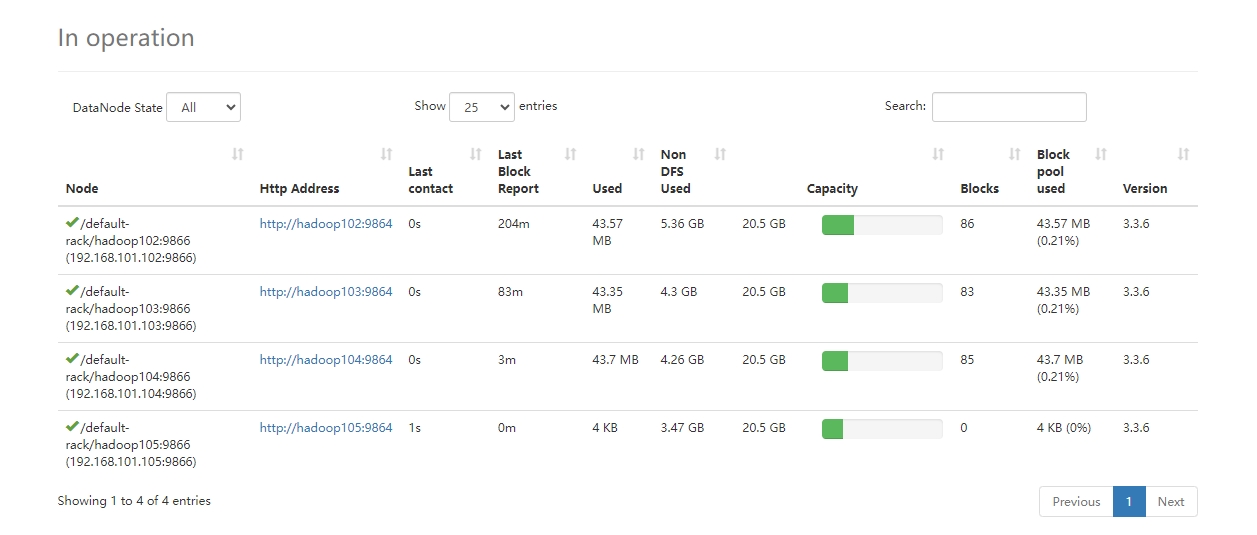

[jack@hadoop105 hadoop-3.3.6]$ yarn --daemon start nodemanager 2. 在hadoop105上上传文件
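Besides checking the web UI, one way to confirm that hadoop105 registered with the NameNode is `hdfs dfsadmin -report -live`, which lists only live DataNodes. The sketch below assumes that each DataNode entry in that report contains a `Hostname: <name>` line, as Hadoop 3.x prints; the function name `is_live_datanode` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: read `hdfs dfsadmin -report -live` output on stdin and
# succeed (exit status 0) if the given hostname appears in a "Hostname:" line.
is_live_datanode() {
    host="$1"
    grep -q "^Hostname: ${host}\$"
}

# Usage on a cluster node (requires a running HDFS):
#   hdfs dfsadmin -report -live | is_live_datanode hadoop105 && echo "hadoop105 is live"
```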

2.1 Upload a file from hadoop105

sh

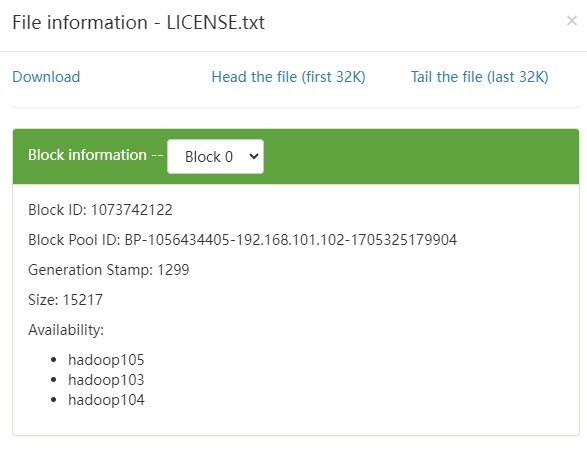

[jack@hadoop105 hadoop-3.3.6]$ hadoop fs -put /opt/module/hadoop-3.3.6/LICENSE.txt /

3. Balancing data across servers

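In production, if jobs are frequently submitted on hadoop102 and hadoop104 with a replication factor of 2, the data-locality principle (HDFS writes the first replica of each block to the local DataNode when the client runs on one) makes hadoop102 and hadoop104 accumulate far more data, while hadoop103 stores relatively little.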

Another case is a newly commissioned server that still holds little data. In both cases, run the cluster balancer.

3.1 Start the balancer

```sh
[jack@hadoop105 hadoop-3.3.6]$ sbin/start-balancer.sh -threshold 10
```

Tip

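The argument 10 means that, once balancing finishes, each DataNode's disk utilization differs from the overall cluster utilization by no more than 10 percentage points. Adjust it to suit your cluster.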
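The threshold becomes concrete with a little arithmetic: a node's utilization is used / capacity, the cluster's utilization is total used / total capacity, and a node counts as balanced when the two differ by at most the threshold. The sketch below checks that criterion for hypothetical used/capacity figures in GB, using integer percentages for simplicity; it illustrates the rule only and is not the balancer's actual implementation. The function name `check_balance` is hypothetical.

```sh
#!/bin/sh
# Illustration of the balancer criterion with integer arithmetic.
# Each argument is "host:used_gb:capacity_gb" (hypothetical figures).
THRESHOLD=10

check_balance() {
    total_used=0
    total_cap=0
    for node in "$@"; do
        used=$(echo "$node" | cut -d: -f2)
        cap=$(echo "$node" | cut -d: -f3)
        total_used=$((total_used + used))
        total_cap=$((total_cap + cap))
    done
    # Cluster-wide utilization in percent.
    avg=$((total_used * 100 / total_cap))
    echo "cluster ${avg}%"
    for node in "$@"; do
        host=$(echo "$node" | cut -d: -f1)
        used=$(echo "$node" | cut -d: -f2)
        cap=$(echo "$node" | cut -d: -f3)
        util=$((used * 100 / cap))
        diff=$((util - avg))
        if [ "$diff" -lt 0 ]; then diff=$((0 - diff)); fi
        if [ "$diff" -le "$THRESHOLD" ]; then
            echo "$host ${util}% balanced"
        else
            echo "$host ${util}% unbalanced"
        fi
    done
}

# Example: hadoop105 was just added and holds almost nothing yet.
check_balance hadoop102:60:100 hadoop103:40:100 hadoop104:60:100 hadoop105:0:100
```

Here the cluster average is 40%, so with threshold 10 only nodes between 30% and 50% count as balanced: hadoop103 passes, while hadoop102 and hadoop104 (60%) are over-utilized and hadoop105 (0%) is under-utilized, so the balancer would move blocks from the former toward hadoop105.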

3.2 Stop the balancer

```sh
[jack@hadoop105 hadoop-3.3.6]$ sbin/stop-balancer.sh
```

Danger

Because HDFS starts a separate Rebalance Server to carry out the rebalancing, avoid running start-balancer.sh on the NameNode; run it on a relatively idle machine instead.

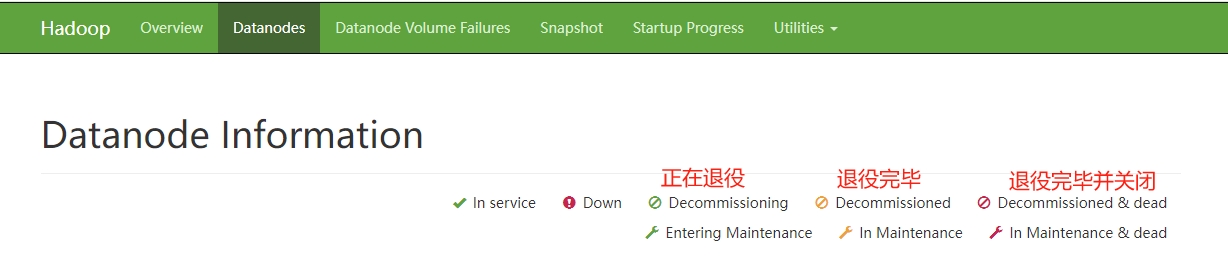

4. Decommissioning a server

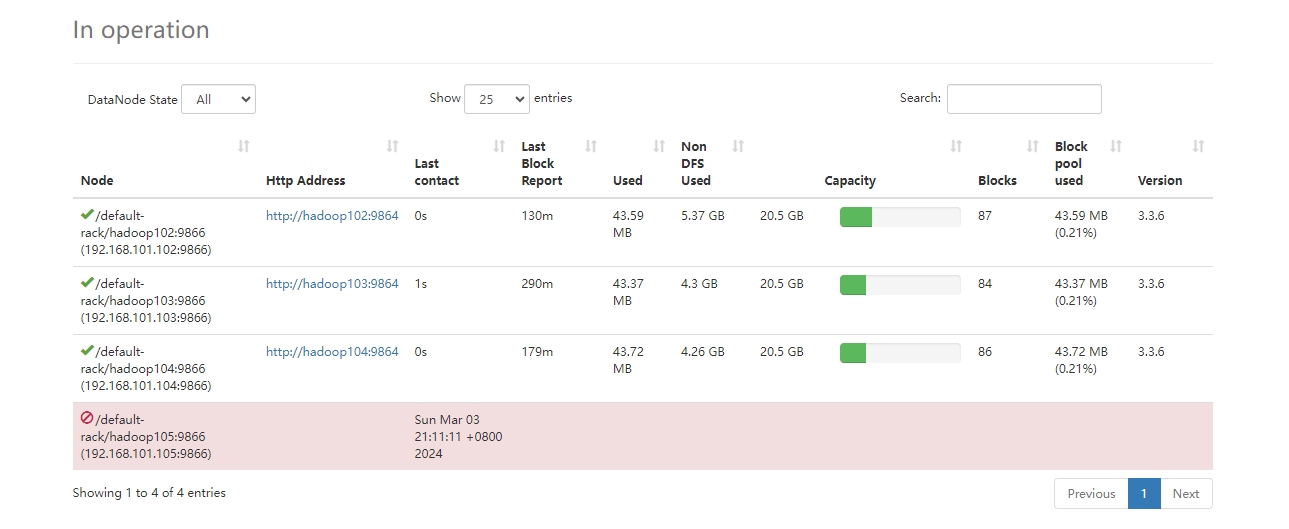

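In production, servers are decommissioned by adding them to a blacklist. This assumes the blacklist file is already registered with the NameNode through the dfs.hosts.exclude property in hdfs-site.xml.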
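Editing the blacklist by hand with vim, as in the following steps, works fine; if decommissioning is scripted, an idempotent append avoids listing the same host twice. The sketch below is one way to do that; the function name `add_to_blacklist` is hypothetical, and the path in the usage comment assumes this article's directory layout.

```sh
#!/bin/sh
# Hypothetical helper: append a hostname to a blacklist (exclude) file
# unless an identical line is already present. Creates the file if needed.
add_to_blacklist() {
    file="$1"
    host="$2"
    touch "$file"
    # -x: whole-line match, -F: treat the hostname literally (dots included).
    if grep -qxF -- "$host" "$file"; then
        echo "$host already listed in $file"
    else
        printf '%s\n' "$host" >> "$file"
        echo "added $host to $file"
    fi
}

# Usage on the NameNode host (path assumed from this article's layout),
# followed by the refresh that makes the NameNode reread the file:
#   add_to_blacklist /opt/module/hadoop-3.3.6/etc/hadoop/blacklist hadoop105
#   hdfs dfsadmin -refreshNodes
```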

- Edit the blacklist file in /opt/module/hadoop-3.3.6/etc/hadoop

```sh
[jack@hadoop102 hadoop]$ vim blacklist
# add the following line to blacklist:
hadoop105
```

- Refresh the NameNode

sh

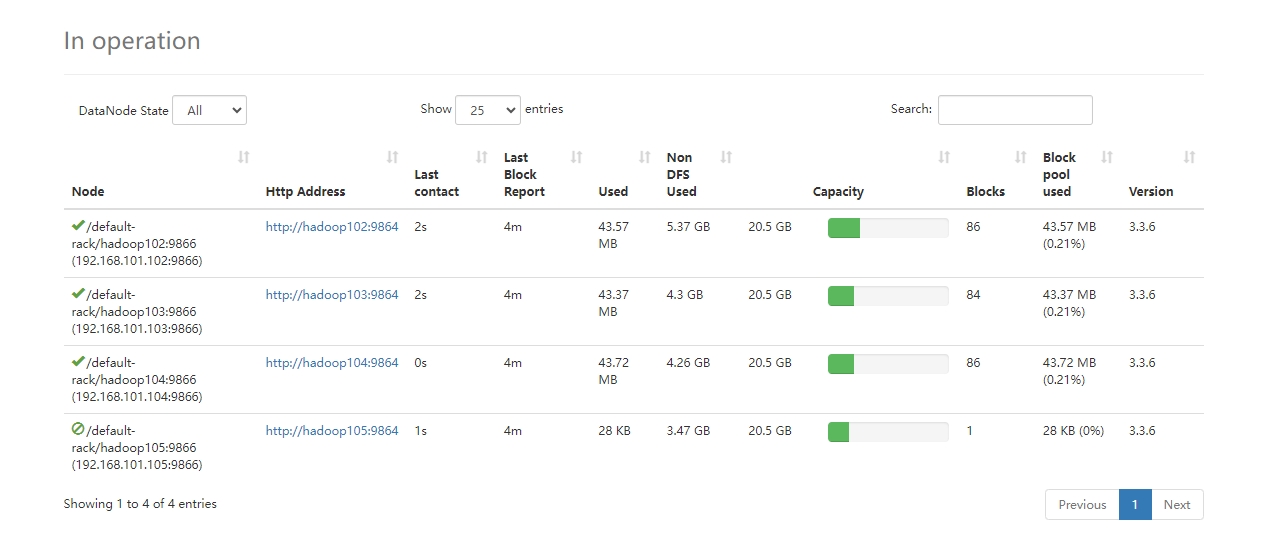

[jack@hadoop102 hadoop-3.3.6]$ hdfs dfsadmin -refreshNodes

Refresh nodes successful- 检查Web浏览器,退役节点的状态为decommission in progress(退役中),说明数据节点正在复制块到其他节点

- Wait until the node's status becomes Decommissioned (all of its blocks have been copied), then stop the DataNode and the NodeManager on that node.

```sh
[jack@hadoop105 etc]$ hdfs --daemon stop datanode
[jack@hadoop105 etc]$ yarn --daemon stop nodemanager
```

After 10 minutes 30 seconds, the NameNode marks hadoop105 as dead (unavailable).
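The 10 minutes 30 seconds follows from the NameNode's heartbeat settings: with defaults, a DataNode is declared dead after 2 × dfs.namenode.heartbeat.recheck-interval (300000 ms) + 10 × dfs.heartbeat.interval (3 s). The sketch below simply evaluates that formula; the function name `dead_node_timeout_s` is hypothetical, and your cluster's timeout differs if either property is overridden in hdfs-site.xml.

```sh
#!/bin/sh
# Evaluate the dead-DataNode timeout:
#   2 * dfs.namenode.heartbeat.recheck-interval (ms) + 10 * dfs.heartbeat.interval (s)
# Arguments: recheck interval in milliseconds, heartbeat interval in seconds.
# Prints the timeout in whole seconds.
dead_node_timeout_s() {
    recheck_ms="$1"
    heartbeat_s="$2"
    echo $(( (2 * recheck_ms + 10 * 1000 * heartbeat_s) / 1000 ))
}

# Defaults: 300000 ms recheck, 3 s heartbeat -> 630 s, i.e. 10 min 30 s.
# On a cluster, the configured values can be read with, for example:
#   hdfs getconf -confKey dfs.heartbeat.interval
t=$(dead_node_timeout_s 300000 3)
echo "${t} s = $((t / 60)) min $((t % 60)) s"
```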

5. Rebalancing data across the cluster

```sh
[jack@hadoop102 hadoop-3.3.6]$ sbin/start-balancer.sh -threshold 10
```