HDFS Core Parameter Configuration

1. NameNode Memory Configuration for Production

- Hadoop 2.x: the NameNode heap defaults to 2000 MB. To change it, set the following in hadoop-env.sh:

# Set the NameNode heap to 3 GB

HADOOP_NAMENODE_OPTS=-Xmx3072m

- Hadoop 3.x: by default the heap is sized dynamically by the JVM, as the comments in hadoop-env.sh explain:

# The maximum amount of heap to use (Java -Xmx). If no unit is provided, it will be converted to MB. Daemons will prefer any Xmx setting in their respective _OPT variable. There is no default; the JVM will autoscale based upon machine memory size.

# export HADOOP_HEAPSIZE_MAX=

# The minimum amount of heap to use (Java -Xms). If no unit is provided, it will be converted to MB. Daemons will prefer any Xms setting in their respective _OPT variable. There is no default; the JVM will autoscale based upon machine memory size.

# export HADOOP_HEAPSIZE_MIN=

HADOOP_NAMENODE_OPTS=-Xmx102400m
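The rule quoted from hadoop-env.sh ("if no unit is provided, it will be converted to MB") can be illustrated with a small sketch. The function name `to_xmx_flag` is hypothetical, and the code only mimics the documented rule; it is not Hadoop's own implementation.

```python
import re


def to_xmx_flag(heapsize_max: str) -> str:
    """Turn a HADOOP_HEAPSIZE_MAX-style value into a JVM -Xmx flag.

    Hypothetical helper: a bare number is treated as megabytes,
    a value that already carries a unit (k, m, g) is passed through.
    """
    value = heapsize_max.strip()
    if re.fullmatch(r"\d+", value):
        value += "m"  # no unit given: interpret the number as MB
    elif not re.fullmatch(r"\d+[kKmMgG]", value):
        raise ValueError(f"not a valid heap size: {heapsize_max!r}")
    return f"-Xmx{value}"


print(to_xmx_flag("3072"))  # -Xmx3072m
print(to_xmx_flag("3g"))    # -Xmx3g
```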

- Check how much memory the NameNode currently uses

### Get the NameNode process ID

[jack@hadoop102 hadoop]$ jps

9953 DataNode

29689 Jps

9835 NameNode

10267 NodeManager

10428 JobHistoryServer

### Inspect the NameNode heap with jmap

[jack@hadoop102 hadoop]$ jmap -heap 9835

Attaching to process ID 9835, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 25.391-b13

using thread-local object allocation.

Mark Sweep Compact GC

Heap Configuration:

MinHeapFreeRatio = 40

MaxHeapFreeRatio = 70

MaxHeapSize = 255852544 (244.0MB)

NewSize = 5570560 (5.3125MB)

MaxNewSize = 85262336 (81.3125MB)

OldSize = 11206656 (10.6875MB)

NewRatio = 2

SurvivorRatio = 8

MetaspaceSize = 21807104 (20.796875MB)

CompressedClassSpaceSize = 1073741824 (1024.0MB)

MaxMetaspaceSize = 17592186044415 MB

G1HeapRegionSize = 0 (0.0MB)

### Inspect the DataNode heap with jmap

[jack@hadoop102 hadoop]$ jmap -heap 9953

Attaching to process ID 9953, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 25.391-b13

using thread-local object allocation.

Mark Sweep Compact GC

Heap Configuration:

MinHeapFreeRatio = 40

MaxHeapFreeRatio = 70

MaxHeapSize = 255852544 (244.0MB)

NewSize = 5570560 (5.3125MB)

MaxNewSize = 85262336 (81.3125MB)

OldSize = 11206656 (10.6875MB)

NewRatio = 2

SurvivorRatio = 8

MetaspaceSize = 21807104 (20.796875MB)

CompressedClassSpaceSize = 1073741824 (1024.0MB)

MaxMetaspaceSize = 17592186044415 MB

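G1HeapRegionSize = 0 (0.0MB)

Both processes report a MaxHeapSize of 244.0 MB. In other words, the NameNode and the DataNode are each capped at 244.0 MB of heap: the sizes are assigned automatically and come out identical, which is not a sensible allocation.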
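Reading MaxHeapSize by eye works for one process; when comparing several daemons it can help to extract the value from the jmap output. A minimal sketch, assuming the `jmap -heap` text has already been captured as a string (the function name `max_heap_mb` is hypothetical):

```python
import re


def max_heap_mb(jmap_output: str) -> float:
    """Return MaxHeapSize from `jmap -heap` text, in MB (1 MB = 1024 * 1024 bytes)."""
    match = re.search(r"MaxHeapSize\s*=\s*(\d+)", jmap_output)
    if match is None:
        raise ValueError("MaxHeapSize not found in jmap output")
    return int(match.group(1)) / (1024 * 1024)


# A few lines copied from the output shown in this section.
sample = """Heap Configuration:
MinHeapFreeRatio = 40
MaxHeapSize = 255852544 (244.0MB)
NewSize = 5570560 (5.3125MB)
"""

print(max_heap_mb(sample))  # 244.0
```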

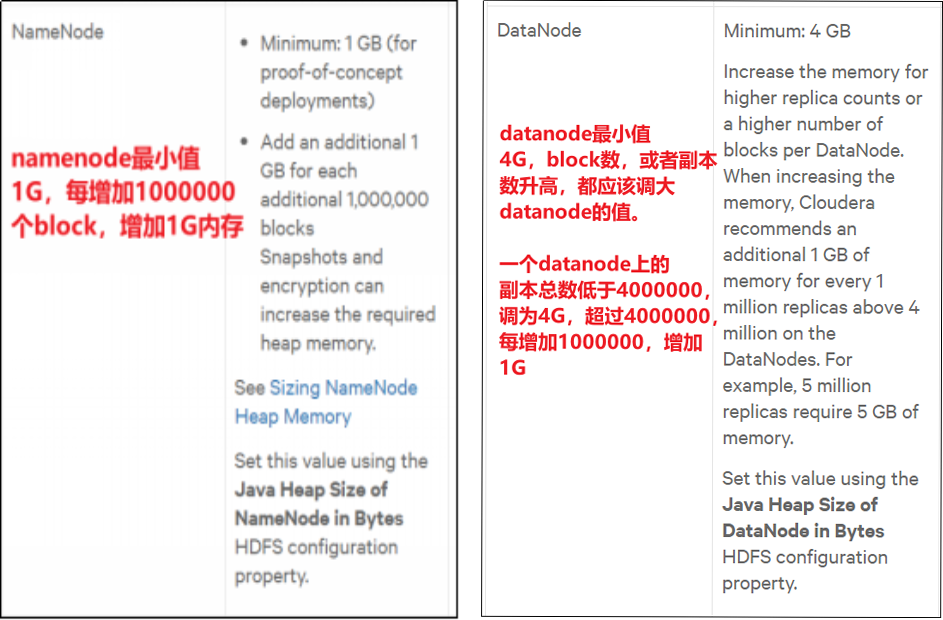

For sizing guidance, see the CDH hardware requirements: https://docs.cloudera.com/documentation/enterprise/6/release-notes/topics/rg_hardware_requirements.html#concept_fzz_dq4_gbb, which includes recommendations for the NameNode and DataNode (see the figure).

- Set the NameNode and DataNode heap sizes in hadoop-env.sh

export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS -Xmx512m"

export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS -Xmx1024m"

2. NameNode Heartbeat Concurrency Configuration

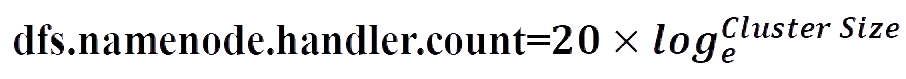

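The NameNode maintains a pool of worker threads that handles concurrent heartbeats from the DataNodes as well as concurrent metadata operations from clients. The pool size is set by dfs.namenode.handler.count, which defaults to 10. Large clusters, or clusters with many clients, usually need a higher value.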

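2.1 Calculating the Heartbeat Concurrency

A rule of thumb from industry practice gives the value:

dfs.namenode.handler.count = 20 × ln(cluster size)

For example, for a cluster of 3 DataNodes the parameter is set to 21. The value can be computed with a few lines of Python: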

[jack@hadoop102 hadoop]$ python

Python 2.7.5 (default, Nov 14 2023, 16:14:06)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import math

>>> print int(20*math.log(3))

21

>>> quit()

2.2 Configuring hdfs-site.xml
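The interpreter session above uses Python 2 syntax. As a sketch, the same rule of thumb in Python 3, wrapped in a function so it can be evaluated for several cluster sizes (the name `handler_count` is hypothetical):

```python
import math


def handler_count(datanodes: int) -> int:
    """Rule of thumb from the text: int(20 * ln(cluster size))."""
    if datanodes < 1:
        raise ValueError("cluster size must be at least 1")
    return int(20 * math.log(datanodes))


for size in (3, 10, 100):
    print(size, handler_count(size))
```

Note that the formula yields 0 for a single node, so it is only meaningful for multi-node clusters. For 3 DataNodes the result is 21, which is the value used in the hdfs-site.xml entry that follows.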

<property>

<name>dfs.namenode.handler.count</name>

<value>21</value>

</property>

3. Enabling the Trash

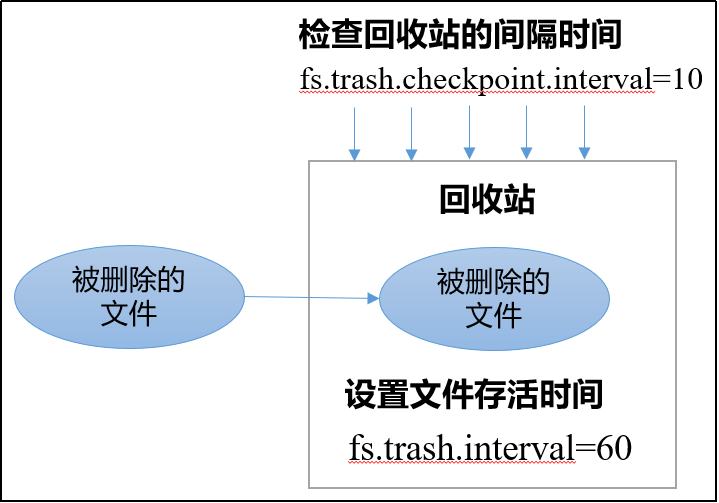

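With the trash enabled, a deleted file can be restored as long as its retention time has not expired. This protects against accidental deletion and serves as a short-term backup.

3.1 How the Trash Works

Trash parameters: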

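- fs.trash.interval defaults to 0, which disables the trash. Any other value is the retention time of a deleted file, in minutes.

- fs.trash.checkpoint.interval defaults to 0. It is the interval, in minutes, at which the trash is checked. If it is 0, it takes the same value as fs.trash.interval.

- fs.trash.checkpoint.interval must be <= fs.trash.interval.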
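The three rules above can be written down as a small check. This is only an illustration of the rules as stated in this section, not a description of how Hadoop itself handles out-of-range values; the function name `effective_checkpoint_interval` is hypothetical.

```python
def effective_checkpoint_interval(trash_interval: int, checkpoint_interval: int = 0) -> int:
    """Resolve the checkpoint interval (in minutes) from the two trash settings."""
    if trash_interval < 0 or checkpoint_interval < 0:
        raise ValueError("intervals must not be negative")
    if trash_interval == 0:
        return 0  # trash disabled, nothing to checkpoint
    if checkpoint_interval == 0:
        return trash_interval  # 0 means "same as fs.trash.interval"
    if checkpoint_interval > trash_interval:
        raise ValueError("fs.trash.checkpoint.interval must be <= fs.trash.interval")
    return checkpoint_interval


print(effective_checkpoint_interval(60))      # 60
print(effective_checkpoint_interval(60, 10))  # 10
```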

3.2 Activating the Trash

Path of the trash directory in the HDFS cluster: /user/jack/.Trash/…
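Activating the trash comes down to giving fs.trash.interval a non-zero value. The sketch below generates such a property element for core-site.xml, where this setting lives. The 1440-minute retention and the function name `trash_property` are assumptions chosen for illustration.

```python
import xml.etree.ElementTree as ET


def trash_property(minutes: int) -> str:
    """Build a <property> element that sets fs.trash.interval (hypothetical helper)."""
    if minutes <= 0:
        raise ValueError("use a positive number of minutes; 0 disables the trash")
    prop = ET.Element("property")
    ET.SubElement(prop, "name").text = "fs.trash.interval"
    ET.SubElement(prop, "value").text = str(minutes)
    return ET.tostring(prop, encoding="unicode")


# 1440 minutes (one day) is an illustrative value, not a recommendation.
print(trash_property(1440))
```

The printed element belongs inside the `<configuration>` block of core-site.xml.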

3.3 Verifying the Trash

[jack@hadoop102 hadoop-3.3.6]$ hadoop fs -rm -r /user/jack/input

2021-07-14 16:13:42,643 INFO fs.TrashPolicyDefault: Moved: 'hdfs://hadoop102:9820/user/jack/input' to trash at: hdfs://hadoop102:9820/user/jack/.Trash/Current/user/jack/input

3.4 Restoring Data from the Trash
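The log line above shows the layout of a trashed file: the original absolute path is appended to /user/jack/.Trash/Current. As a sketch, the original location can be derived from the trash path by dropping everything up to and including the directory directly under .Trash (the function name `original_path` is hypothetical):

```python
from pathlib import PurePosixPath


def original_path(trash_path: str) -> str:
    """Map <home>/.Trash/<checkpoint dir>/<original path> back to <original path>."""
    parts = PurePosixPath(trash_path).parts
    if ".Trash" not in parts:
        raise ValueError(f"not a trash path: {trash_path}")
    # Skip ".Trash" itself and the directory right below it ("Current" here).
    rest = parts[parts.index(".Trash") + 2:]
    if not rest:
        raise ValueError(f"no file path below the trash directory: {trash_path}")
    return "/" + "/".join(rest)


print(original_path("/user/jack/.Trash/Current/user/jack/input"))  # /user/jack/input
```

The trash path and the derived path are the two arguments of the move that restores the data: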

[jack@hadoop102 hadoop-3.3.6]$ hadoop fs -mv /user/jack/.Trash/Current/user/jack/input /user/jack/input

Important notes

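- Files deleted programmatically do not go through the trash. The program has to call moveToTrash() for a file to end up there:

// Trash is org.apache.hadoop.fs.Trash; conf is a Configuration, path is the Path to delete

Trash trash = new Trash(conf);

trash.moveToTrash(path);

- Files deleted directly through the web UI do not go through the trash either.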