Configuring Kerberos in CM6

1. Install Kerberos

Refer to the Kerberos installation notes.

2. Enable Kerberos security authentication

2.1 Create an administrator principal/instance for CM

```
[root@hadoop101 ~]# kadmin.local -q "addprinc cloudera-scm/admin"
Authenticating as principal root/admin@HADOOP.COM with password.
WARNING: no policy specified for cloudera-scm/admin@HADOOP.COM; defaulting to no policy
Enter password for principal "cloudera-scm/admin@HADOOP.COM": (enter password)
Re-enter password for principal "cloudera-scm/admin@HADOOP.COM": (confirm password)
Principal "cloudera-scm/admin@HADOOP.COM" created.
```

2.2 Enable Kerberos
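Before starting the wizard, it may be worth checking which encryption types the KDC actually holds keys for, because the types entered in step 2.3 should be among them. The sketch below assumes MIT Kerberos, whose getprinc output lists one "Key: vno N, <enctype>" line per key; the helper name principal_enctypes is our own. The KDC prints canonical names, for example aes128-cts-hmac-sha1-96 for aes128-cts.

```shell
# Hypothetical helper: print the encryption types of a principal's keys,
# parsed from `kadmin.local -q "getprinc <principal>"` output on stdin.
principal_enctypes() {
  # MIT getprinc prints lines like "Key: vno 1, aes128-cts-hmac-sha1-96";
  # some releases append a salt description after another comma, so keep
  # only the field right after the key version number.
  sed -n 's/^Key: vno [0-9]*, \([^,]*\).*/\1/p'
}

# Usage on the KDC host (hadoop101), as root:
#   kadmin.local -q "getprinc cloudera-scm/admin" | principal_enctypes
```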

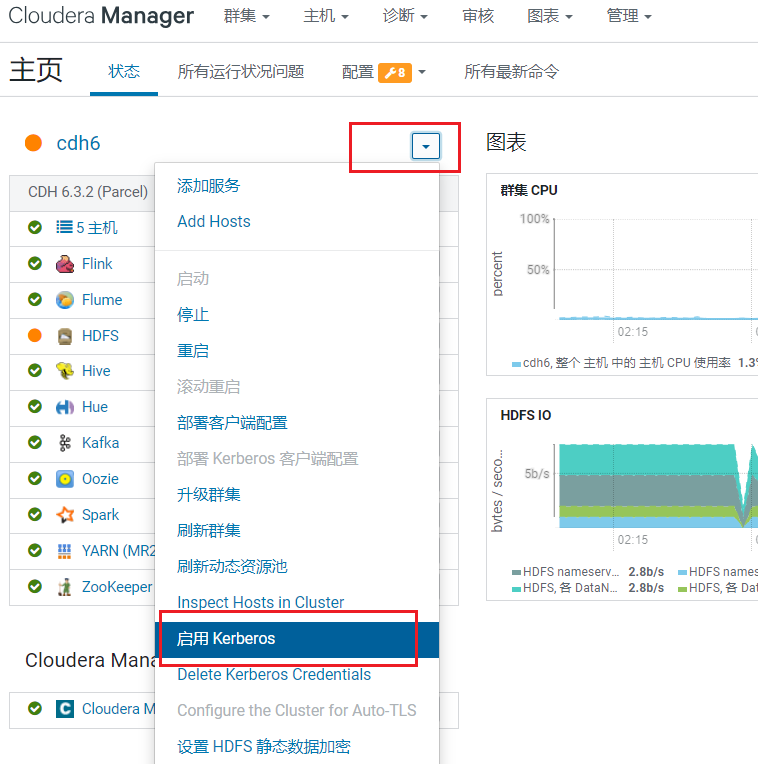

On the home page, click Enable Kerberos, then confirm the environment (check all items):

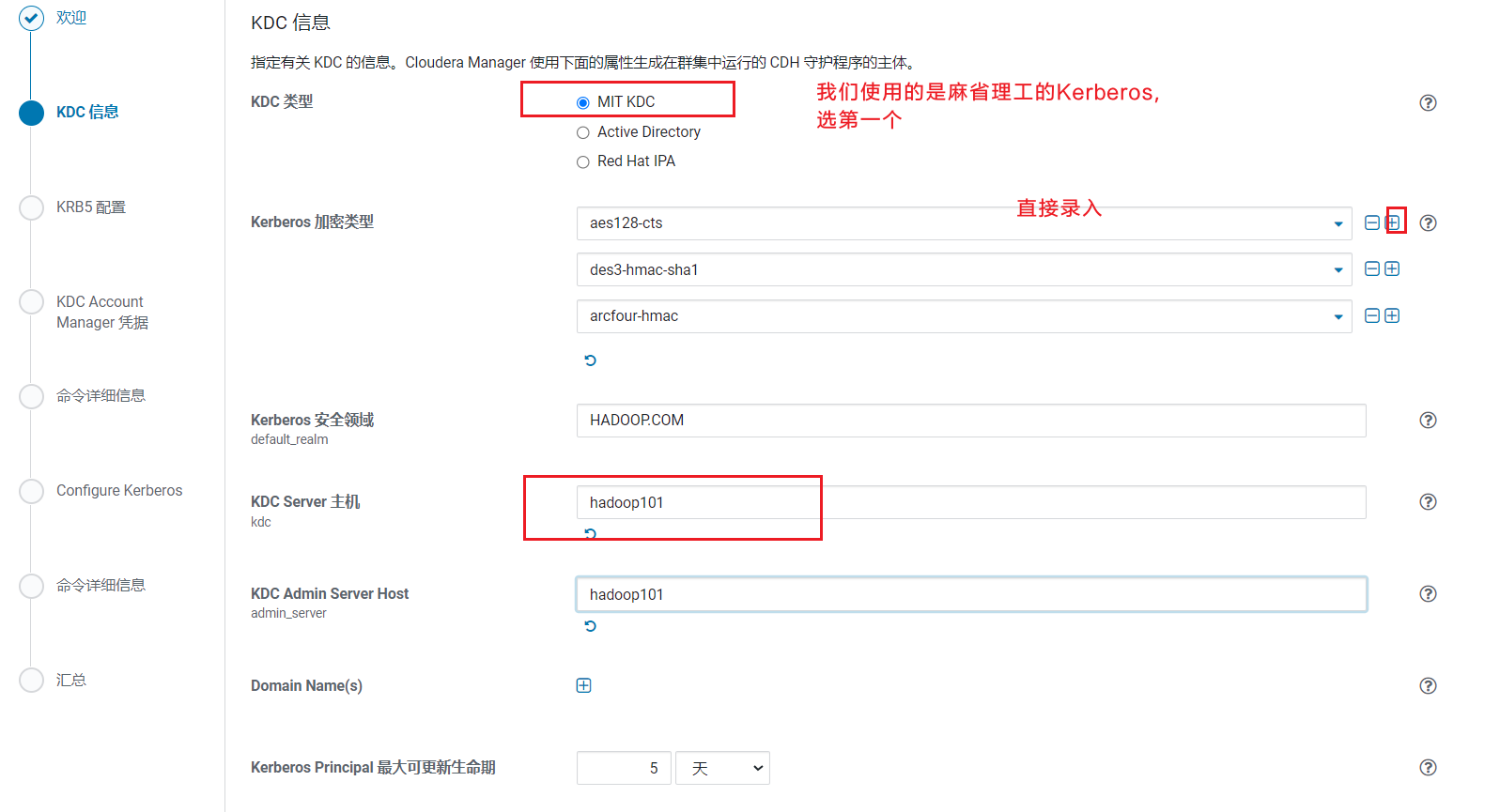

2.3 Fill in the configuration

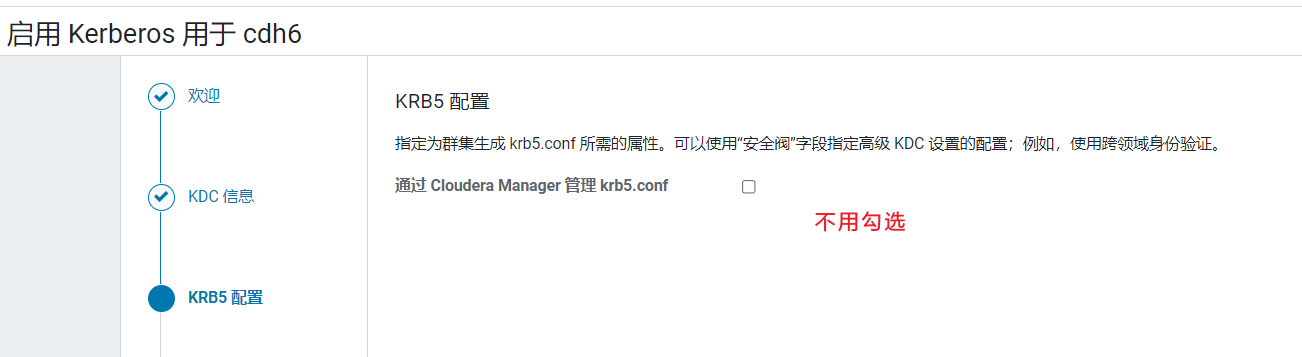

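Kerberos encryption types: aes128-cts, des3-hmac-sha1, arcfour-hmac. Click Continue. Because the krb5.conf file was already generated in an earlier step, leave the krb5.conf option unchecked and click Continue: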

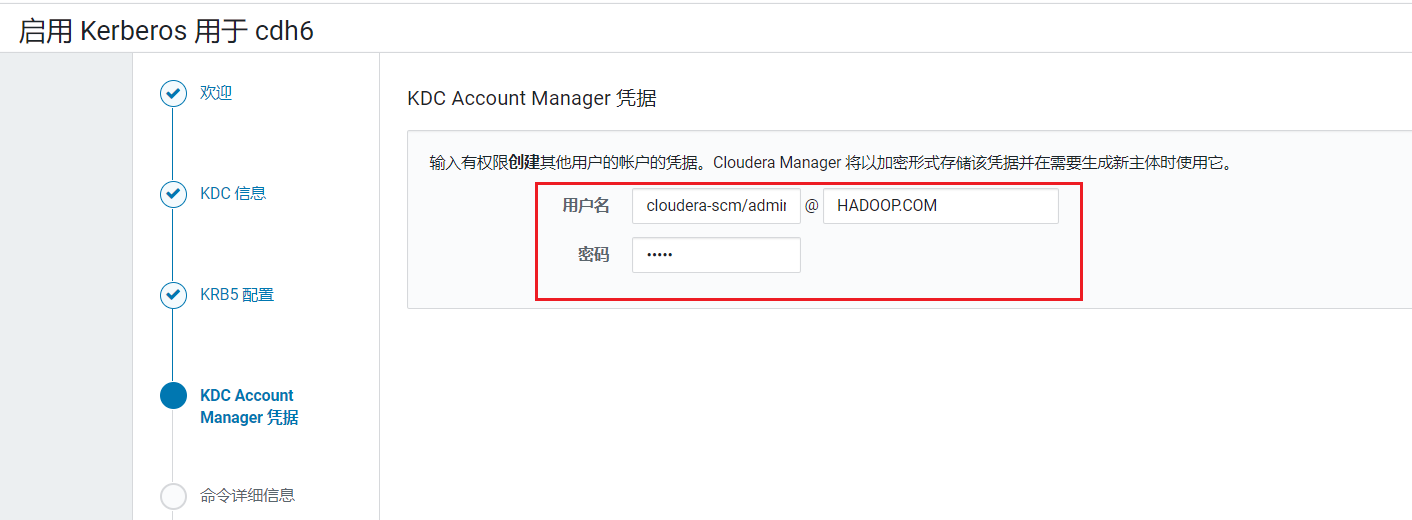

Enter the principal and password created for CDH earlier (cloudera-scm/admin@HADOOP.COM):

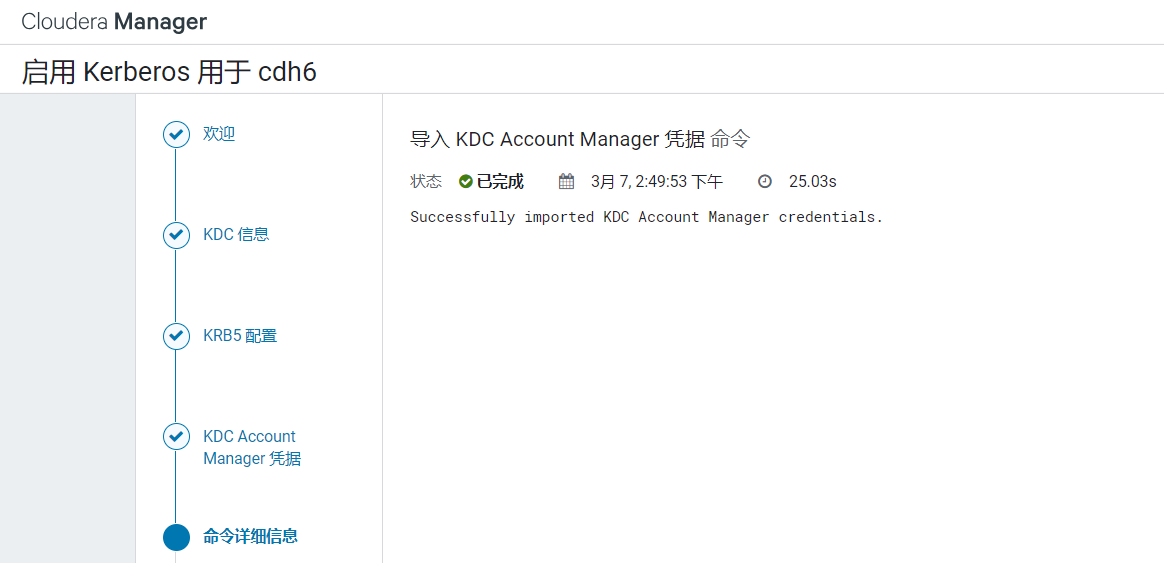

The credentials are then verified; once verification succeeds, click Continue:

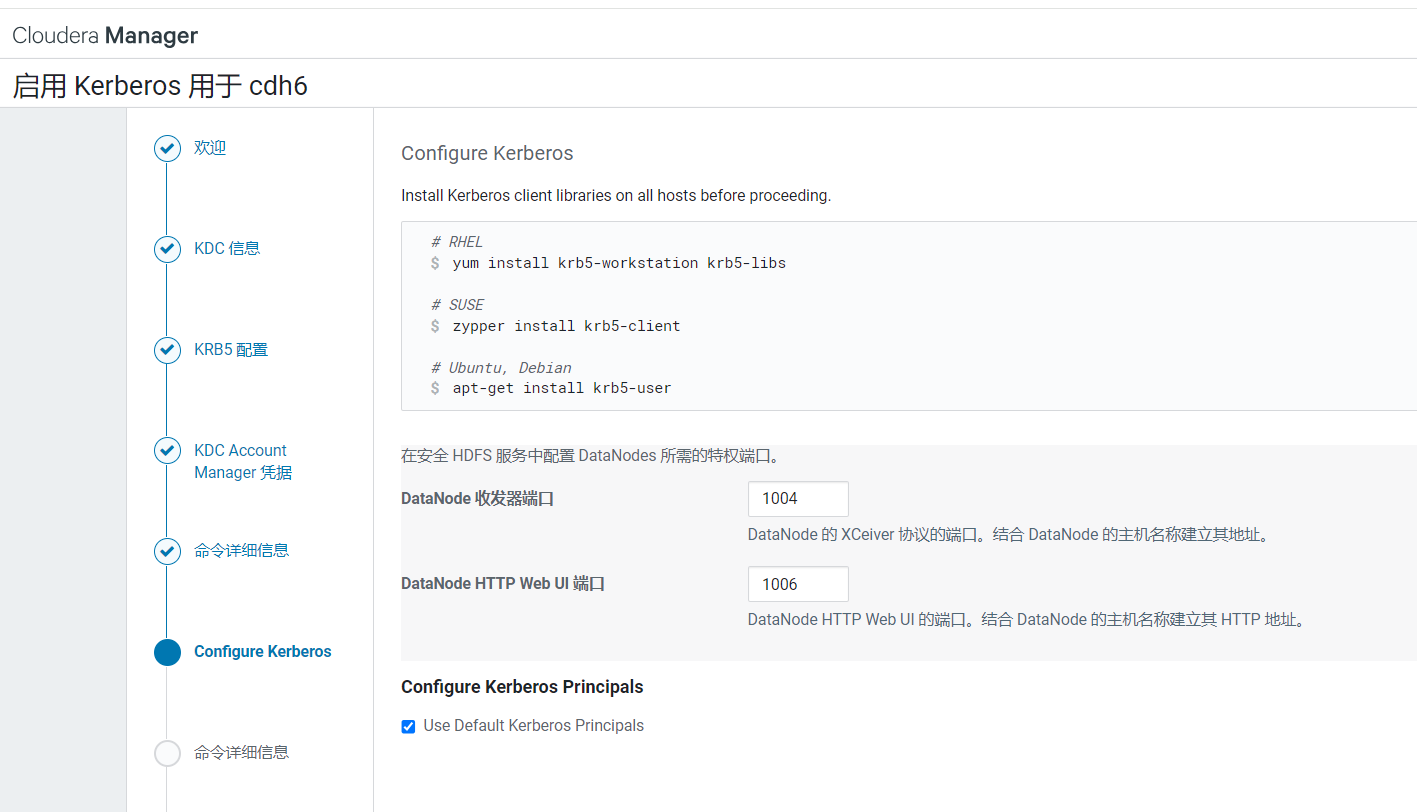

CDH lists the commands it is going to run; click Continue:

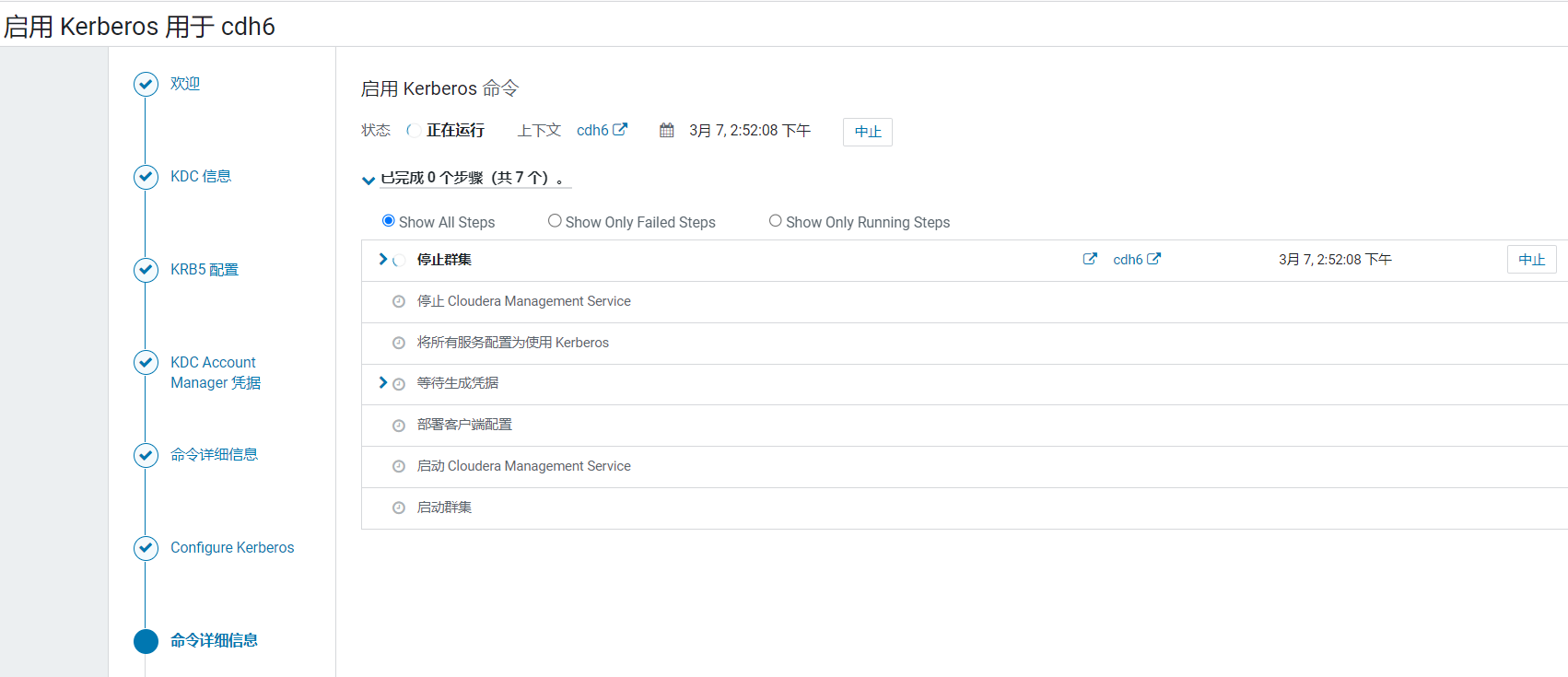

CDH starts executing. It stops the whole cluster and then generates the service principal credentials, which takes a while.

Click Finish:

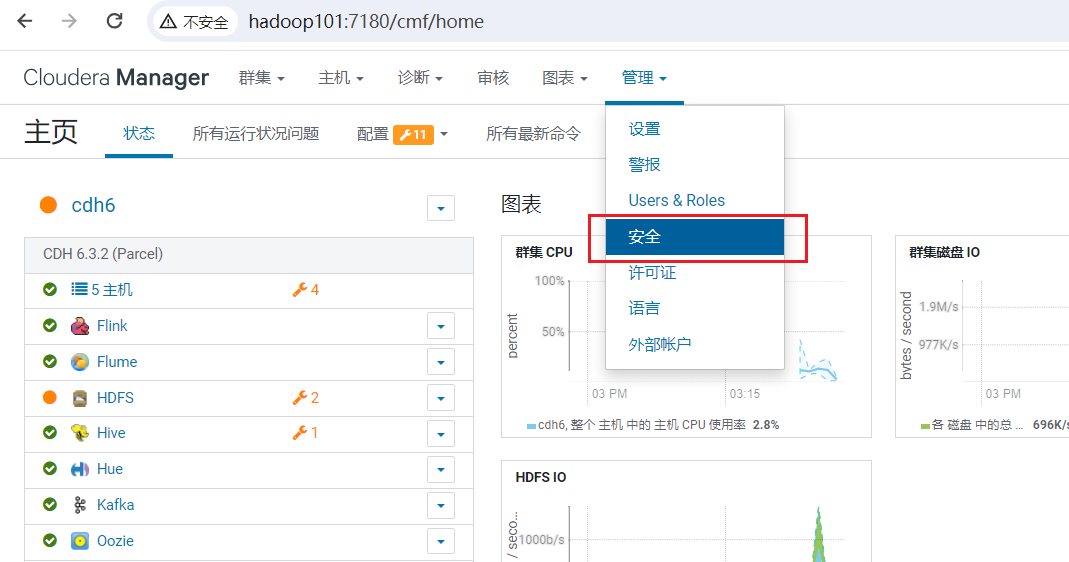

To view the service principal credentials that were generated, click Security:

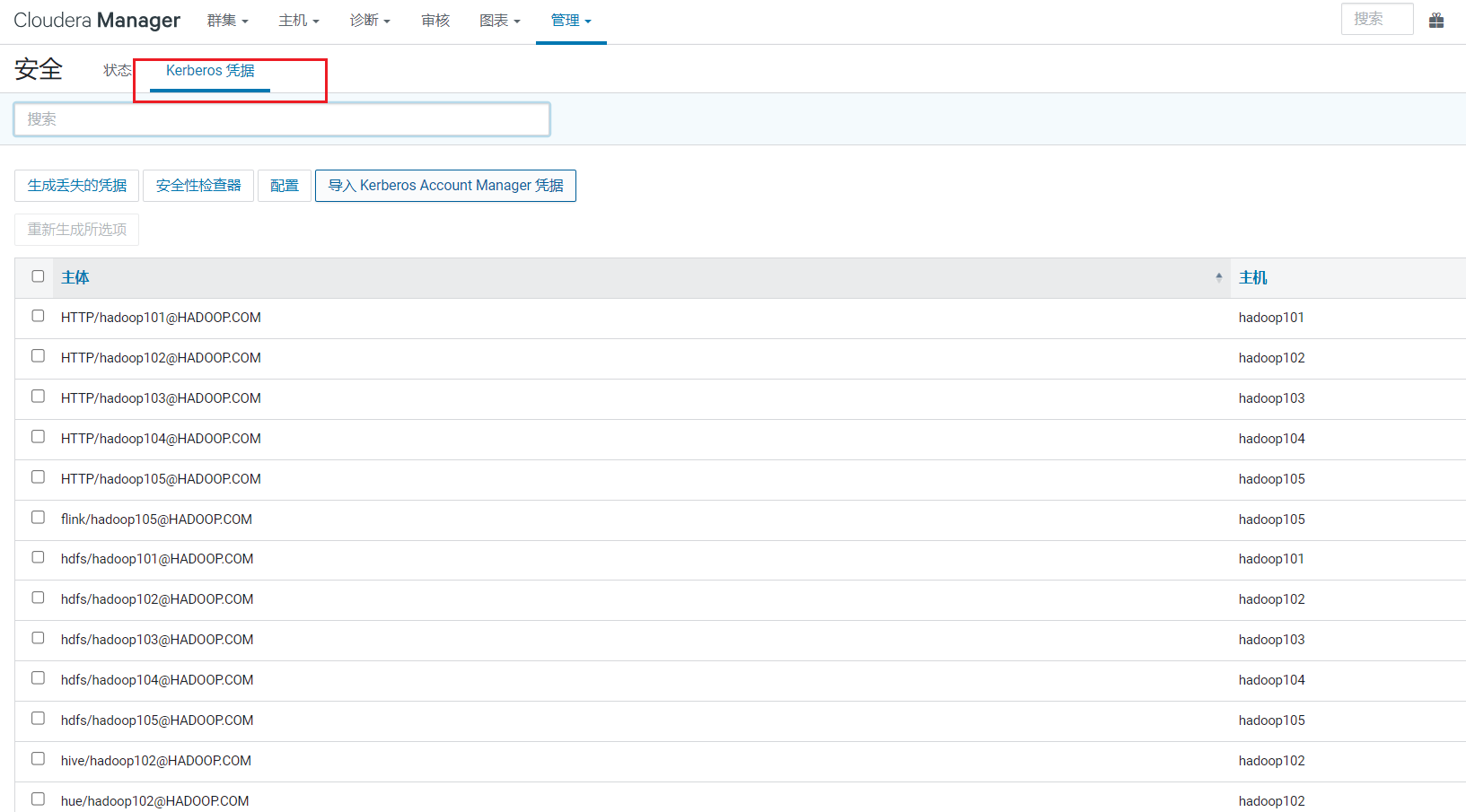

Open the Kerberos Credentials menu, where the many generated credentials are listed:

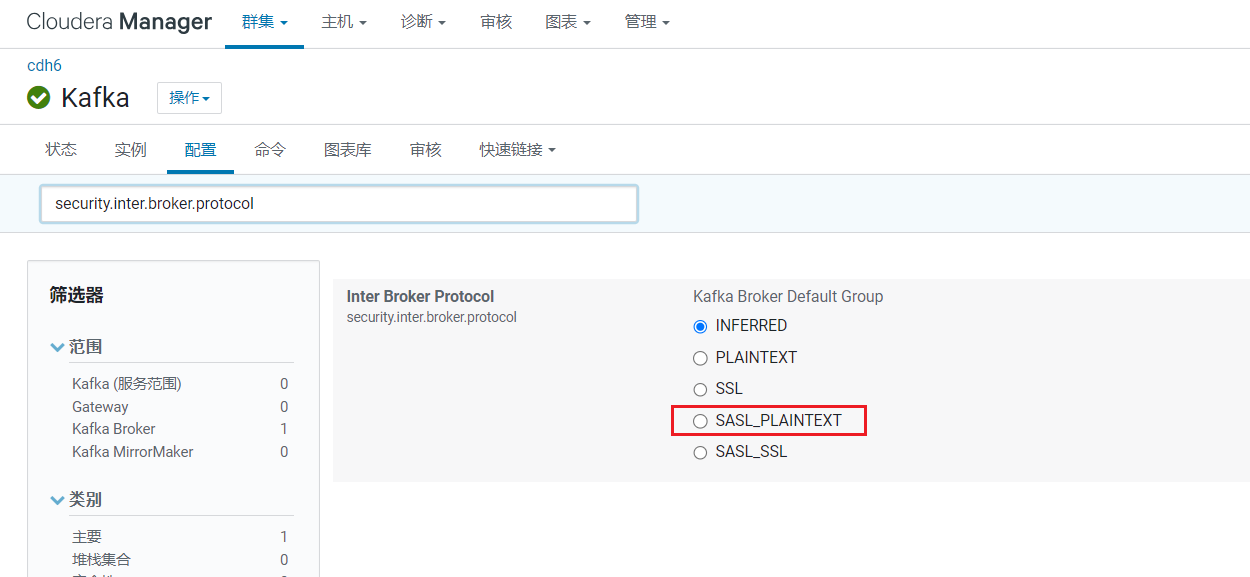
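The same list can be cross-checked on the KDC side. A minimal sketch, assuming it runs as root on hadoop101 (the KDC host used in 2.1) and that the realm is HADOOP.COM: filter_service_principals is a hypothetical helper that keeps only two-part principals (service/host@REALM) from the listprincs output and drops the KDC's own principals (K/M, krbtgt, kadmin) as well as */admin accounts.

```shell
# Hypothetical helper: filter `kadmin.local -q "listprincs"` output (stdin)
# down to service/host@REALM principals. Realm defaults to HADOOP.COM.
filter_service_principals() {
  realm="${1:-HADOOP.COM}"
  # Escape dots so the realm is matched literally in the regex
  realm_re=$(printf '%s' "$realm" | sed 's/\./\\./g')
  # Keep name/instance@REALM lines, then drop KDC-internal and admin principals
  grep -E "^[A-Za-z0-9_.-]+/[^/@]+@${realm_re}\$" \
    | grep -v -e '^K/M@' -e '^krbtgt/' -e '^kadmin/' -e '/admin@'
}

# Usage on the KDC host:
#   kadmin.local -q "listprincs" | filter_service_principals
```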

3. Configure Kafka

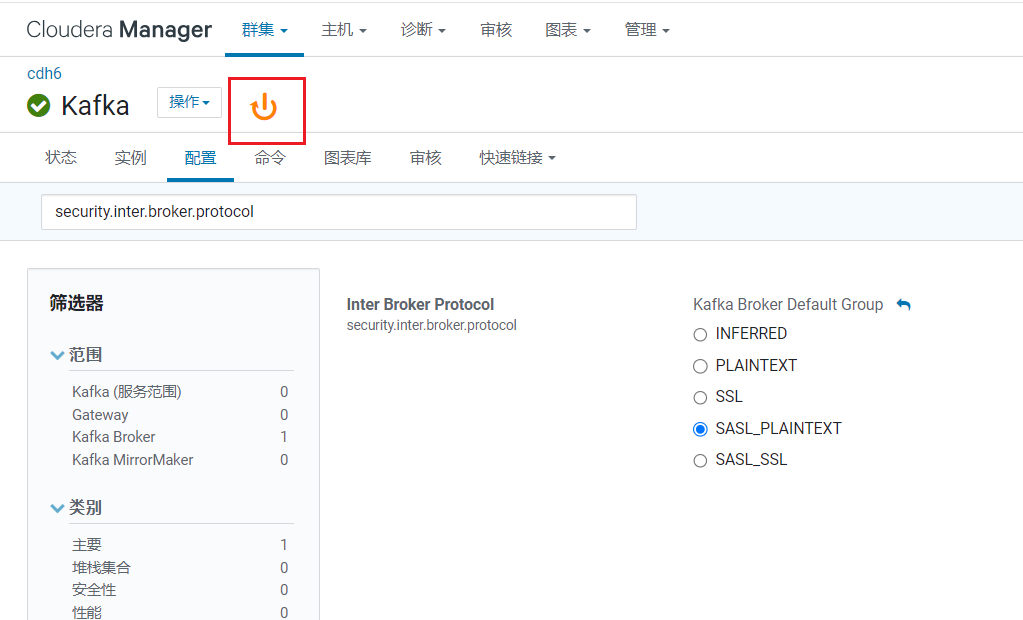

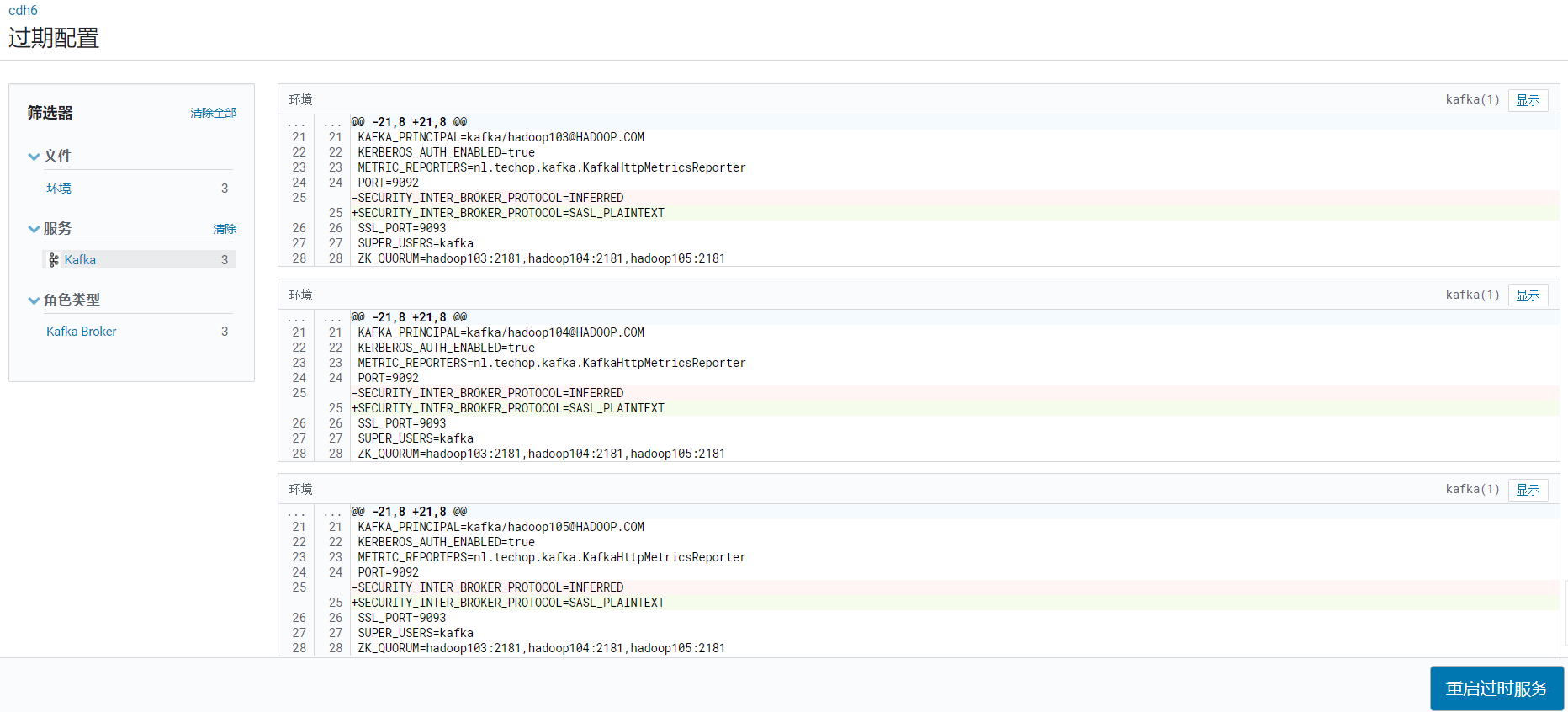

Modify the Kafka configuration: in Kafka's configuration, search for "security.inter.broker.protocol" and set it to SASL_PLAINTEXT.

After the change, Kafka must be restarted:

Confirm the configuration and click Restart Now:

The restart begins:

4. Hands-on in the Kerberos-secured environment

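After Kerberos is enabled, communication between systems (for example, Flume to Kafka) and between users and systems (for example, a user accessing HDFS) must be authenticated before it can proceed. Everyday operations such as accessing HDFS or consuming a Kafka topic therefore require authentication first, and every script used in the data warehouse needs an added authentication step to keep working.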
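An interactive kinit with a password does not suit scheduled scripts. A common approach is to export the principal's key into a keytab once and log in from it at the start of each script. A minimal sketch, using the hive/hive@HADOOP.COM principal created in 4.1 as the example; the keytab path and the kerberos_login name are hypothetical.

```shell
# One-time setup (as an admin; the keytab path is a hypothetical example):
#   kadmin -p admin/admin -q "xst -k /etc/security/keytabs/hive.keytab hive/hive@HADOOP.COM"
# Note: xst (ktadd) re-randomizes the principal's keys, so its password stops
# working afterwards; on the KDC host, kadmin.local's "xst -norandkey" keeps them.

# Hypothetical helper: obtain a TGT from a keytab without a password prompt
kerberos_login() {
  keytab="$1"
  principal="$2"
  if [ ! -r "$keytab" ]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi
  # -k: authenticate with a keytab; -t: path of that keytab
  kinit -kt "$keytab" "$principal"
}

# Usage at the top of a job script:
#   kerberos_login /etc/security/keytabs/hive.keytab hive/hive@HADOOP.COM || exit 1
#   hdfs dfs -ls /
```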

4.1 Accessing HDFS as a user

- Access HDFS directly

```
## Access HDFS on hadoop102
[root@hadoop102 ~]# hdfs dfs -ls /
25/03/07 15:59:07 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
25/03/07 15:59:07 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
25/03/07 15:59:07 INFO retry.RetryInvocationHandler: java.io.IOException: Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]; Host Details : local host is: "hadoop102/10.206.0.6"; destination host is: "hadoop102":8020; , while invoking ClientNamenodeProtocolTranslatorPB.getFileInfo over hadoop102/10.206.0.6:8020 after 1 failover attempts. Trying to failover after sleeping for 975ms.
```

As you can see, HDFS can no longer be used.

- Create a user principal/instance in the Kerberos database

```
[root@hadoop102 ~]# kadmin -p admin/admin -q "addprinc hive/hive@HADOOP.COM"
Authenticating as principal admin/admin with password.
Password for admin/admin@HADOOP.COM:
WARNING: no policy specified for hive/hive@HADOOP.COM; defaulting to no policy
Enter password for principal "hive/hive@HADOOP.COM":
Re-enter password for principal "hive/hive@HADOOP.COM":
Principal "hive/hive@HADOOP.COM" created.
```

- Authenticate the user

```
[root@hadoop102 ~]# kinit hive/hive@HADOOP.COM
Password for hive/hive@HADOOP.COM:
```

- Access HDFS

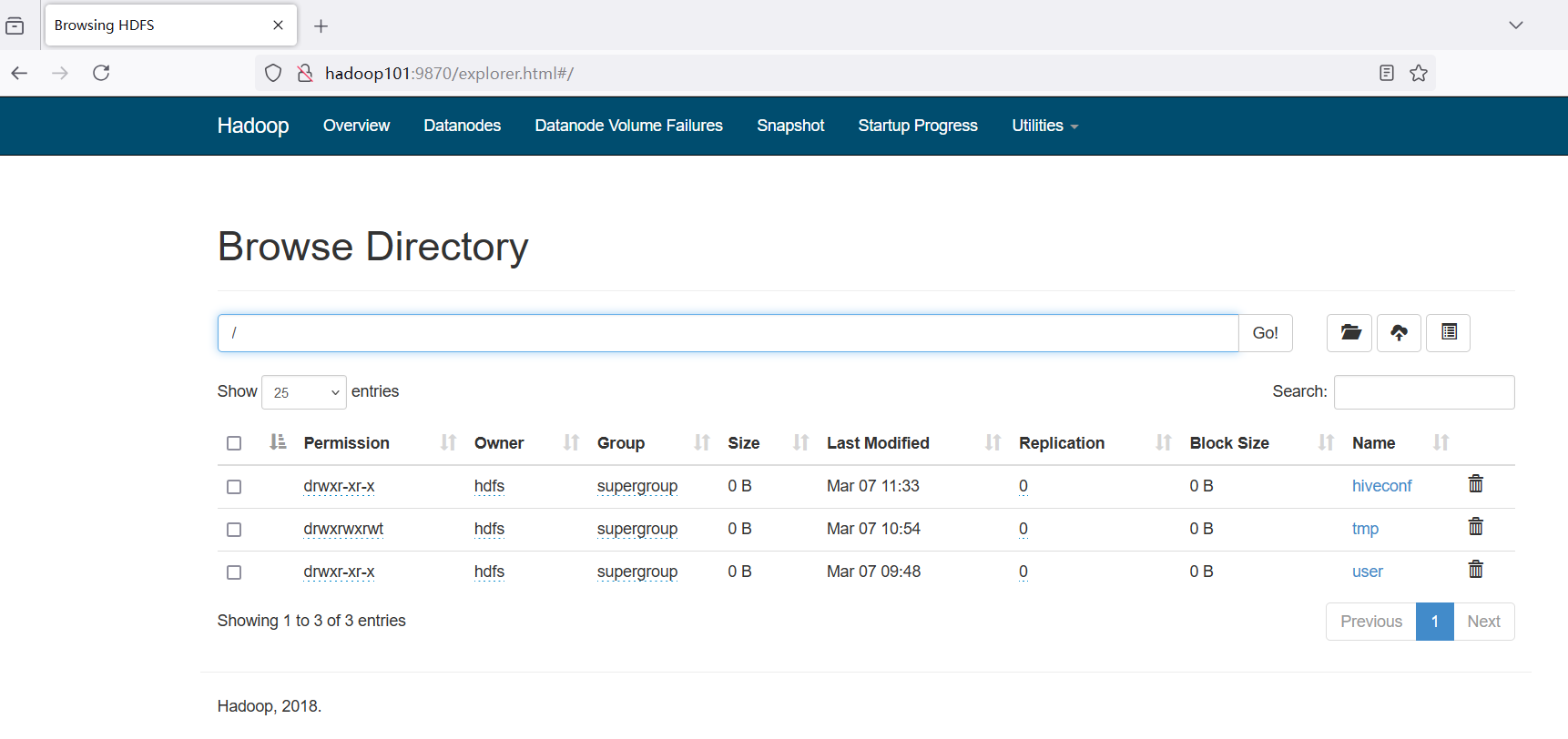

```
[root@hadoop102 ~]# hdfs dfs -ls /
Found 3 items
drwxr-xr-x - hdfs supergroup 0 2025-03-07 11:33 /hiveconf
drwxrwxrwt - hdfs supergroup 0 2025-03-07 10:54 /tmp
drwxr-xr-x - hdfs supergroup 0 2025-03-07 09:48 /user
```
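Because the identity now comes from the ticket cache, a script may want to confirm which principal is cached before touching HDFS. A sketch assuming MIT klist, whose output contains a "Default principal: ..." line; both function names are hypothetical.

```shell
# Hypothetical helper: print the default principal of the current ticket
# cache, or nothing if there is no cache (klist errors are silenced).
current_principal() {
  klist 2>/dev/null | sed -n 's/^Default principal: //p'
}

# Hypothetical guard: fail if the cached principal is not the expected one
require_principal() {
  actual=$(current_principal)
  if [ "$actual" != "$1" ]; then
    echo "expected principal $1, found '${actual:-none}'" >&2
    return 1
  fi
}

# Usage:
#   require_principal hive/hive@HADOOP.COM || exit 1
```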

```
## With a TGT, other services such as Hive accept you as well (Kerberos only authenticates; it does not authorize)
[root@hadoop102 ~]# hive
WARNING: Use "yarn jar" to launch YARN applications.
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-6.3.2-1.cdh6.3.2.p0.1605554/jars/hive-common-2.1.1-cdh6.3.2.jar!/hive-log4j2.properties Async: false

WARNING: Hive CLI is deprecated and migration to Beeline is recommended.
hive>
```

4.2 Consume a Kafka topic

Access Kafka on hadoop103:

```
[root@hadoop103 ~]# kafka-topics --bootstrap-server hadoop103:9092,hadoop104:9092,hadoop105:9092 --list
25/03/07 16:17:45 INFO utils.Log4jControllerRegistration$: Registered kafka:type=kafka.Log4jController MBean
25/03/07 16:17:45 INFO admin.AdminClientConfig: AdminClientConfig values:
bootstrap.servers = [hadoop103:9092, hadoop104:9092, hadoop105:9092]
client.dns.lookup = default
client.id =
connections.max.idle.ms = 300000
metadata.max.age.ms = 300000
metric.reporters = []
metrics.num.samples = 2
metrics.recording.level = INFO
metrics.sample.window.ms = 30000
receive.buffer.bytes = 65536
reconnect.backoff.max.ms = 1000
reconnect.backoff.ms = 50
request.timeout.ms = 120000
retries = 5
retry.backoff.ms = 100
sasl.client.callback.handler.class = null
sasl.jaas.config = null
sasl.kerberos.kinit.cmd = /usr/bin/kinit
sasl.kerberos.min.time.before.relogin = 60000
sasl.kerberos.service.name = null
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.ticket.renew.window.factor = 0.8
sasl.login.callback.handler.class = null
sasl.login.class = null
sasl.login.refresh.buffer.seconds = 300
sasl.login.refresh.min.period.seconds = 60
sasl.login.refresh.window.factor = 0.8
sasl.login.refresh.window.jitter = 0.05
sasl.mechanism = GSSAPI
security.protocol = PLAINTEXT
send.buffer.bytes = 131072
ssl.cipher.suites = null
ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
ssl.endpoint.identification.algorithm = null
ssl.key.password = null
ssl.keymanager.algorithm = SunX509
ssl.keystore.location = null
ssl.keystore.password = null
ssl.keystore.type = JKS
ssl.protocol = TLS
ssl.provider = null
ssl.secure.random.implementation = null
ssl.trustmanager.algorithm = PKIX
ssl.truststore.location = null
ssl.truststore.password = null
ssl.truststore.type = JKS
25/03/07 16:17:45 INFO utils.AppInfoParser: Kafka version: 2.2.1-cdh6.3.2
25/03/07 16:17:45 INFO utils.AppInfoParser: Kafka commitId: unknown
25/03/07 16:17:47 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 11 due to node -1 being disconnected
25/03/07 16:17:50 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 23 due to node -1 being disconnected
25/03/07 16:17:52 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 35 due to node -3 being disconnected
25/03/07 16:17:54 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 47 due to node -1 being disconnected
25/03/07 16:17:57 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 59 due to node -3 being disconnected
25/03/07 16:17:59 INFO internals.AdminMetadataManager: [AdminClient clientId=adminclient-1] Metadata update failed
org.apache.kafka.common.errors.DisconnectException: Cancelled fetchMetadata request with correlation id 71 due to node -3 being disconnected
```

As you can see, hadoop103 can no longer connect to Kafka. Create a kafka/kafka principal (the hive/hive principal created earlier would also work, but a separate one avoids confusion):

```
[root@hadoop103 ~]# kadmin -p admin/admin -q "addprinc kafka/kafka@HADOOP.COM"
Authenticating as principal admin/admin with password.
Password for admin/admin@HADOOP.COM:
WARNING: no policy specified for kafka/kafka@HADOOP.COM; defaulting to no policy
Enter password for principal "kafka/kafka@HADOOP.COM":
Re-enter password for principal "kafka/kafka@HADOOP.COM":
Principal "kafka/kafka@HADOOP.COM" created.
```

Then authenticate the user:

```
[root@hadoop103 ~]# kinit kafka/kafka@HADOOP.COM
Password for kafka/kafka@HADOOP.COM:
```

Consuming the topic with Kafka again, the consumer hangs:

```
[root@hadoop103 ~]# kafka-console-consumer --bootstrap-server hadoop103:9092 --topic test1 --from-beginning
25/03/07 17:33:45 INFO utils.Log4jControllerRegistration$: Registered kafka:type=kafka.Log4jController MBean
25/03/07 17:33:45 INFO consumer.ConsumerConfig: ConsumerConfig values:
auto.commit.interval.ms = 5000
auto.offset.reset = earliest
bootstrap.servers = [hadoop103:9092]
check.crcs = true
client.dns.lookup = default
client.id =
connections.max.idle.ms = 540000
default.api.timeout.ms = 60000
enable.auto.commit = false
exclude.internal.topics = true
fetch.max.bytes = 52428800
fetch.max.wait.ms = 500
fetch.min.bytes = 1
group.id = console-consumer-94525
heartbeat.interval.ms = 3000
interceptor.classes = []
internal.leave.group.on.close = true
isolation.level = read_uncommitted
key.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer
max.partition.fetch.bytes = 1048576
max.poll.interval.ms = 300000
max.poll.records = 500
metadata.max.age.ms = 300000
metric.reporters = []
metrics.num.samples = 2
metrics.recording.level = INFO
metrics.sample.window.ms = 30000
partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]
receive.buffer.bytes = 65536
reconnect.backoff.max.ms = 1000
reconnect.backoff.ms = 50
request.timeout.ms = 30000
retry.backoff.ms = 100
sasl.client.callback.handler.class = null
sasl.jaas.config = null
sasl.kerberos.kinit.cmd = /usr/bin/kinit
sasl.kerberos.min.time.before.relogin = 60000
sasl.kerberos.service.name = null
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.ticket.renew.window.factor = 0.8
sasl.login.callback.handler.class = null
sasl.login.class = null
sasl.login.refresh.buffer.seconds = 300
sasl.login.refresh.min.period.seconds = 60
sasl.login.refresh.window.factor = 0.8
sasl.login.refresh.window.jitter = 0.05
sasl.mechanism = GSSAPI
security.protocol = PLAINTEXT
send.buffer.bytes = 131072
session.timeout.ms = 10000
ssl.cipher.suites = null
ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
ssl.endpoint.identification.algorithm = null
ssl.key.password = null
ssl.keymanager.algorithm = SunX509
ssl.keystore.location = null
ssl.keystore.password = null
ssl.keystore.type = JKS
ssl.protocol = TLS
ssl.provider = null
ssl.secure.random.implementation = null
ssl.trustmanager.algorithm = PKIX
ssl.truststore.location = null
ssl.truststore.password = null
ssl.truststore.type = JKS
value.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer
25/03/07 17:33:45 INFO utils.AppInfoParser: Kafka version: 2.2.1-cdh6.3.2
25/03/07 17:33:45 INFO utils.AppInfoParser: Kafka commitId: unknown
25/03/07 17:33:45 INFO consumer.KafkaConsumer: [Consumer clientId=consumer-1, groupId=console-consumer-94525] Subscribed to topic(s): test1
```

The reason is that Java has to be made aware of Kerberos. Create a jaas.conf file (JAAS: Java Authentication and Authorization Service):

```
[root@hadoop103 hive]# vim /var/lib/hive/jaas.conf
## File contents:
KafkaClient {
  com.sun.security.auth.module.Krb5LoginModule required
  useTicketCache=true;
};
```

Create a consumer.properties file:

```
[root@hadoop103 conf]# vim /etc/kafka/conf/consumer.properties
## File contents:
security.protocol=SASL_PLAINTEXT
sasl.kerberos.service.name=kafka
```

Declare the path of the jaas.conf file:

```
## Temporary setting (current shell only)
export KAFKA_OPTS="-Djava.security.auth.login.config=/var/lib/hive/jaas.conf"
```

Tip

To declare the jaas.conf path permanently:

```
[root@hadoop103 ~]# vim /etc/profile.d/my_env.sh
## Add the following line
export KAFKA_OPTS="-Djava.security.auth.login.config=/var/lib/hive/jaas.conf"

[root@hadoop103 ~]# source /etc/profile.d/my_env.sh
```

Consume the Kafka topic data with kafka-console-consumer:

```
[root@hadoop103 ~]# kafka-console-consumer --bootstrap-server hadoop103:9092 --topic test1 --from-beginning --consumer.config /etc/kafka/conf/consumer.properties
25/03/07 17:33:27 INFO utils.Log4jControllerRegistration$: Registered kafka:type=kafka.Log4jController MBean
25/03/07 17:33:27 INFO consumer.ConsumerConfig: ConsumerConfig values:
auto.commit.interval.ms = 5000
auto.offset.reset = earliest
bootstrap.servers = [hadoop103:9092]
check.crcs = true
client.dns.lookup = default
client.id =
connections.max.idle.ms = 540000
default.api.timeout.ms = 60000
enable.auto.commit = false
exclude.internal.topics = true
fetch.max.bytes = 52428800
fetch.max.wait.ms = 500
fetch.min.bytes = 1
group.id = console-consumer-56024
heartbeat.interval.ms = 3000
interceptor.classes = []
internal.leave.group.on.close = true
isolation.level = read_uncommitted
key.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer
max.partition.fetch.bytes = 1048576
max.poll.interval.ms = 300000
max.poll.records = 500
metadata.max.age.ms = 300000
metric.reporters = []
metrics.num.samples = 2
metrics.recording.level = INFO
metrics.sample.window.ms = 30000
partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]
receive.buffer.bytes = 65536
reconnect.backoff.max.ms = 1000
reconnect.backoff.ms = 50
request.timeout.ms = 30000
retry.backoff.ms = 100
sasl.client.callback.handler.class = null
sasl.jaas.config = null
sasl.kerberos.kinit.cmd = /usr/bin/kinit
sasl.kerberos.min.time.before.relogin = 60000
sasl.kerberos.service.name = kafka
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.ticket.renew.window.factor = 0.8
sasl.login.callback.handler.class = null
sasl.login.class = null
sasl.login.refresh.buffer.seconds = 300
sasl.login.refresh.min.period.seconds = 60
sasl.login.refresh.window.factor = 0.8
sasl.login.refresh.window.jitter = 0.05
sasl.mechanism = GSSAPI
security.protocol = SASL_PLAINTEXT
send.buffer.bytes = 131072
session.timeout.ms = 10000
ssl.cipher.suites = null
ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]
ssl.endpoint.identification.algorithm = null
ssl.key.password = null
ssl.keymanager.algorithm = SunX509
ssl.keystore.location = null
ssl.keystore.password = null
ssl.keystore.type = JKS
ssl.protocol = TLS
ssl.provider = null
ssl.secure.random.implementation = null
ssl.trustmanager.algorithm = PKIX
ssl.truststore.location = null
ssl.truststore.password = null
ssl.truststore.type = JKS
value.deserializer = class org.apache.kafka.common.serialization.ByteArrayDeserializer
25/03/07 17:33:27 INFO authenticator.AbstractLogin: Successfully logged in.
25/03/07 17:33:27 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh thread started.
25/03/07 17:33:27 INFO kerberos.KerberosLogin: [Principal=null]: TGT valid starting at: Fri Mar 07 17:27:02 CST 2025
25/03/07 17:33:27 INFO kerberos.KerberosLogin: [Principal=null]: TGT expires: Sat Mar 08 17:27:02 CST 2025
25/03/07 17:33:27 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh sleeping until: Sat Mar 08 13:33:48 CST 2025
25/03/07 17:33:27 INFO utils.AppInfoParser: Kafka version: 2.2.1-cdh6.3.2
25/03/07 17:33:27 INFO utils.AppInfoParser: Kafka commitId: unknown
25/03/07 17:33:27 INFO consumer.KafkaConsumer: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Subscribed to topic(s): test1
25/03/07 17:33:27 INFO clients.Metadata: Cluster ID: DKemVPy9SjmVSj2yXx0o1w
25/03/07 17:33:28 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Discovered group coordinator hadoop105:9092 (id: 2147483599 rack: null)
25/03/07 17:33:28 INFO internals.ConsumerCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Revoking previously assigned partitions []
25/03/07 17:33:28 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] (Re-)joining group
25/03/07 17:33:28 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] (Re-)joining group
25/03/07 17:33:31 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Successfully joined group with generation 1
25/03/07 17:33:31 INFO internals.ConsumerCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Setting newly assigned partitions: test1-0
25/03/07 17:33:31 INFO internals.Fetcher: [Consumer clientId=consumer-1, groupId=console-consumer-56024] Resetting offset for partition test1-0 to offset 0.
```

Because topic test1 contains no data, nothing is printed when consuming from offset 0.

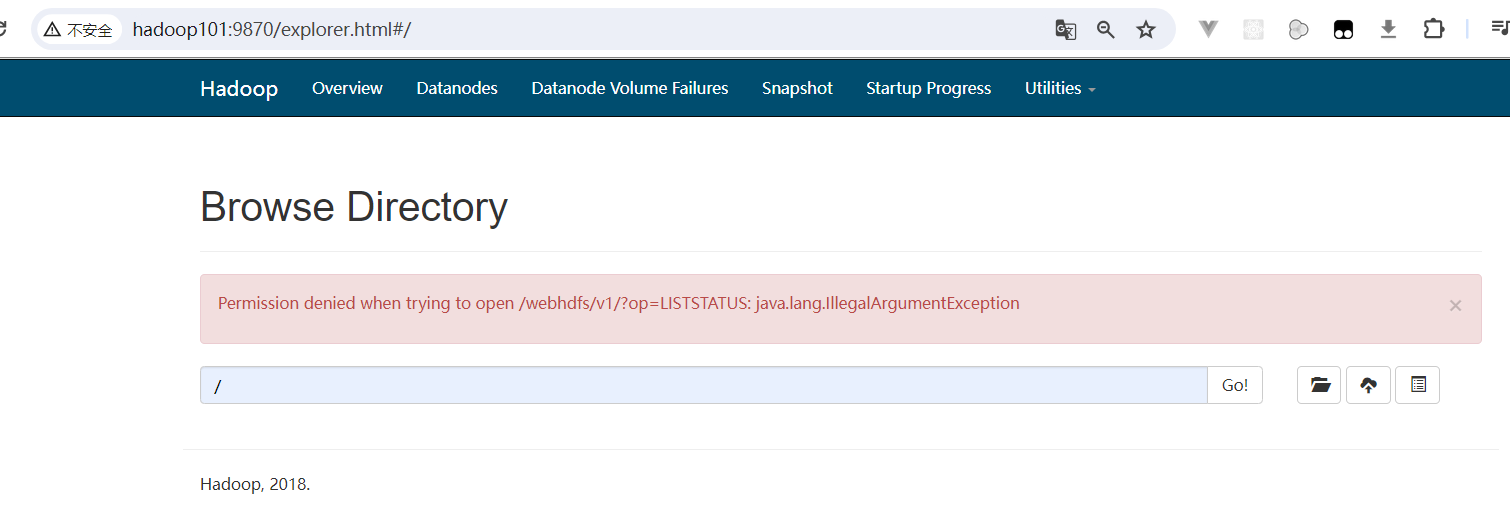

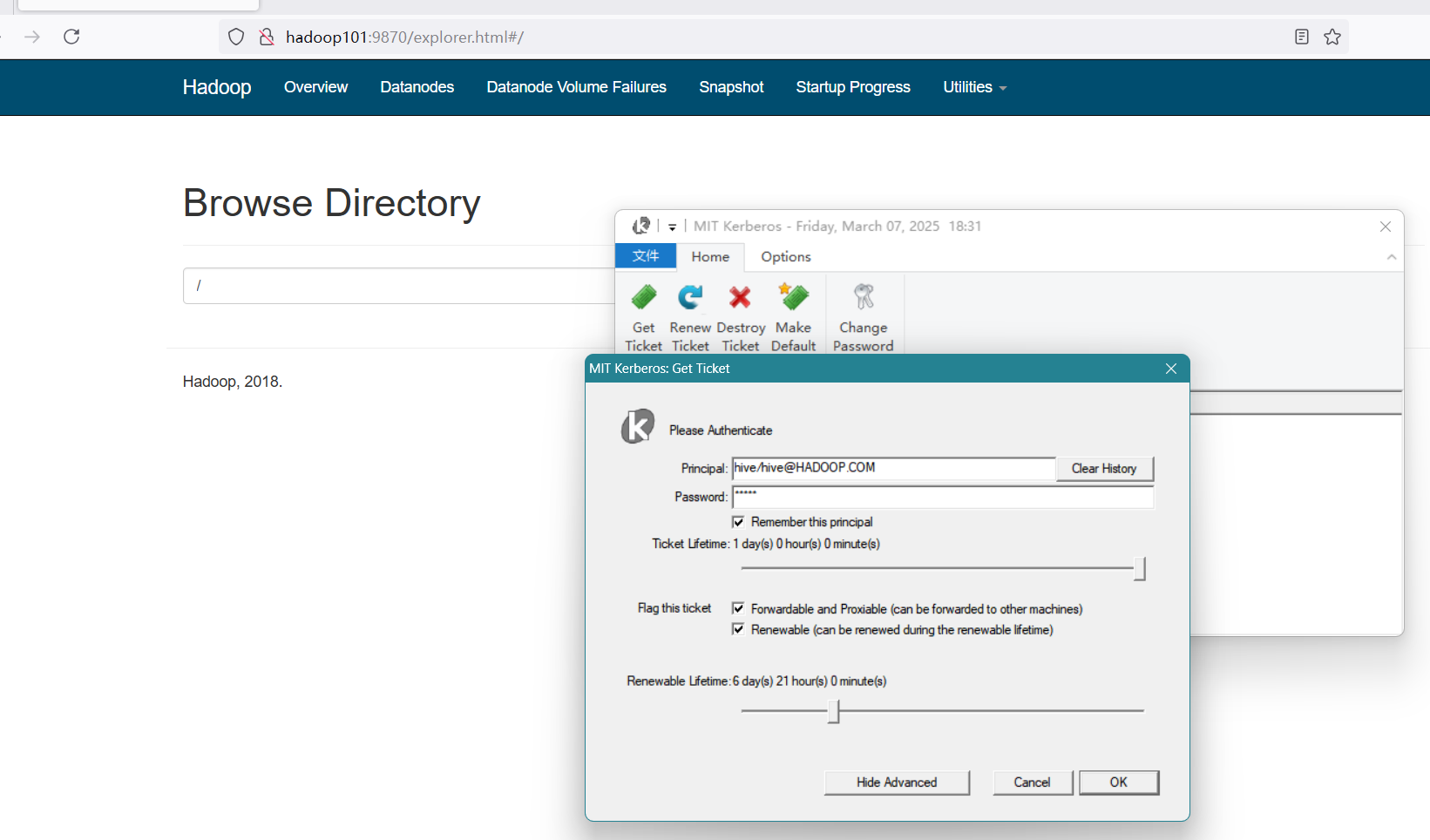
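The jaas.conf and consumer.properties steps above can be bundled into one helper, which also makes it easy to reuse the same settings for the console producer (for example, to put some test data into test1) and for kafka-topics. A sketch under these assumptions: the output directory and the function name are hypothetical, the file contents mirror the ones above, and the flags are those of Kafka 2.2 (the console producer still takes --broker-list in this version, and kafka-topics accepts --command-config together with --bootstrap-server).

```shell
# Hypothetical helper: write the Kerberos client files used in 4.2 into $1.
write_kafka_kerberos_client_conf() {
  dir="$1"
  mkdir -p "$dir" || return 1

  # JAAS login section read by Kafka clients; reuses the kinit ticket cache
  cat > "$dir/jaas.conf" <<'EOF'
KafkaClient {
  com.sun.security.auth.module.Krb5LoginModule required
  useTicketCache=true;
};
EOF

  # Client properties shared by the consumer, producer and admin tools
  cat > "$dir/client.properties" <<'EOF'
security.protocol=SASL_PLAINTEXT
sasl.kerberos.service.name=kafka
EOF
}

# Usage (after kinit kafka/kafka@HADOOP.COM; /tmp/kafka-krb is an example):
#   write_kafka_kerberos_client_conf /tmp/kafka-krb
#   export KAFKA_OPTS="-Djava.security.auth.login.config=/tmp/kafka-krb/jaas.conf"
#   kafka-console-producer --broker-list hadoop103:9092 --topic test1 \
#     --producer.config /tmp/kafka-krb/client.properties
#   kafka-topics --bootstrap-server hadoop103:9092 --list \
#     --command-config /tmp/kafka-krb/client.properties
```

Kafka clients of this version can alternatively take the JAAS entry inline through the sasl.jaas.config property (shown as null in the logs above), e.g. sasl.jaas.config=com.sun.security.auth.module.Krb5LoginModule required useTicketCache=true; in client.properties, which removes the need for KAFKA_OPTS.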

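4.3 Browser authentication for the HDFS web UI

After Kerberos is enabled in CDH, the web UI behaves as shown below: you can open the page on port 9870 but cannot browse directories or files, because the local machine has not been authenticated. Next we set up authentication on the local machine.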

Tip

Because of browser restrictions, we use Firefox here; other browsers such as Chrome and IE ran into problems.

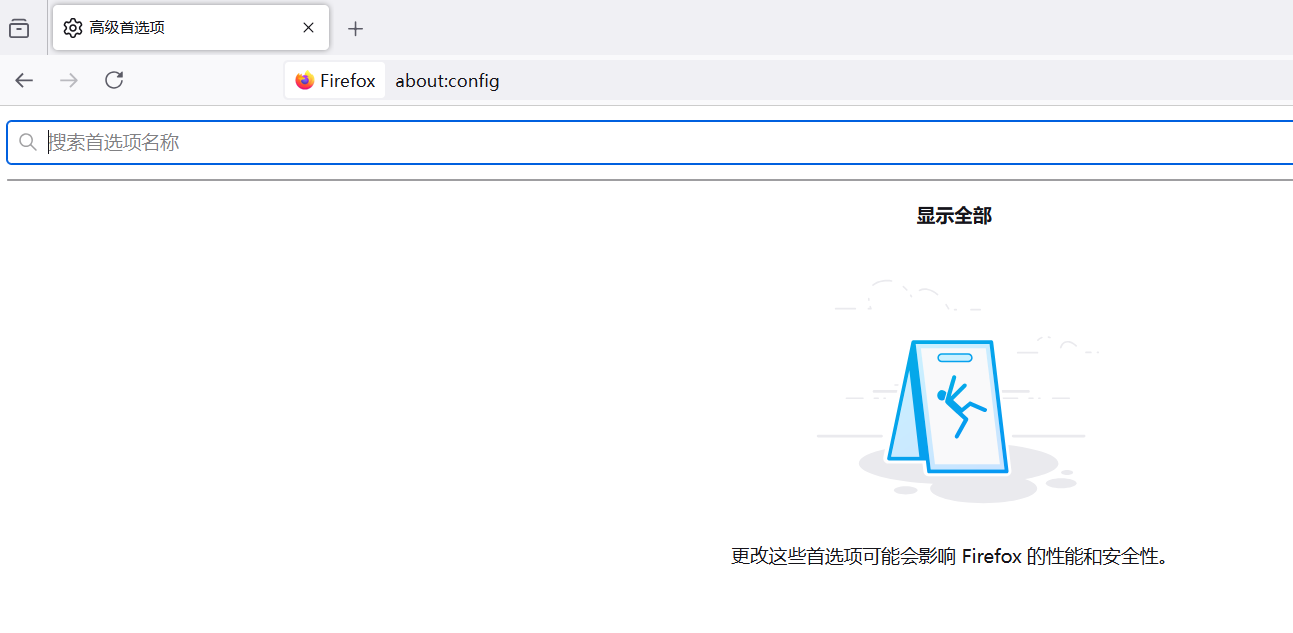

Open Firefox and enter about:config in the address bar, then click Accept the Risk and Continue to open the settings page:

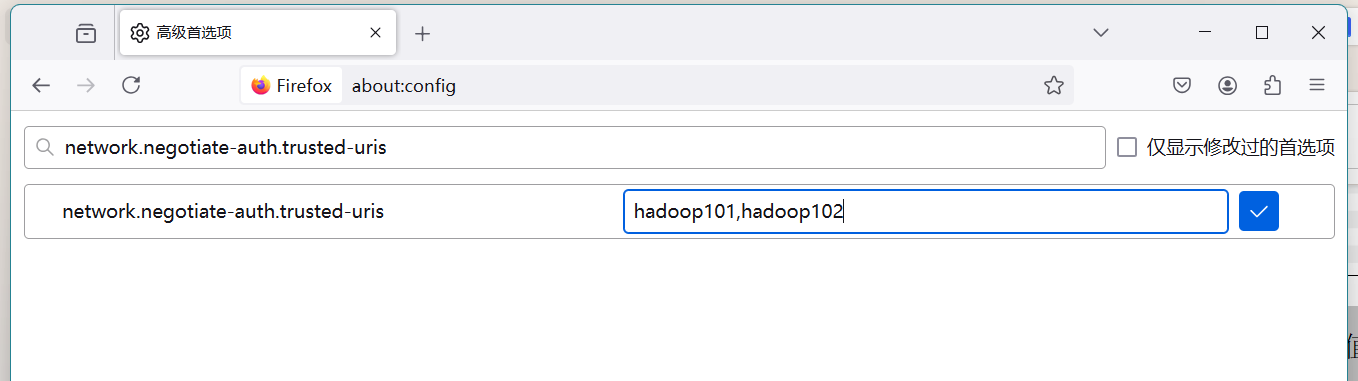

Search for network.negotiate-auth.trusted-uris and set its value to your server hostnames (because HA is configured here, both hostnames must be listed, separated by a comma).

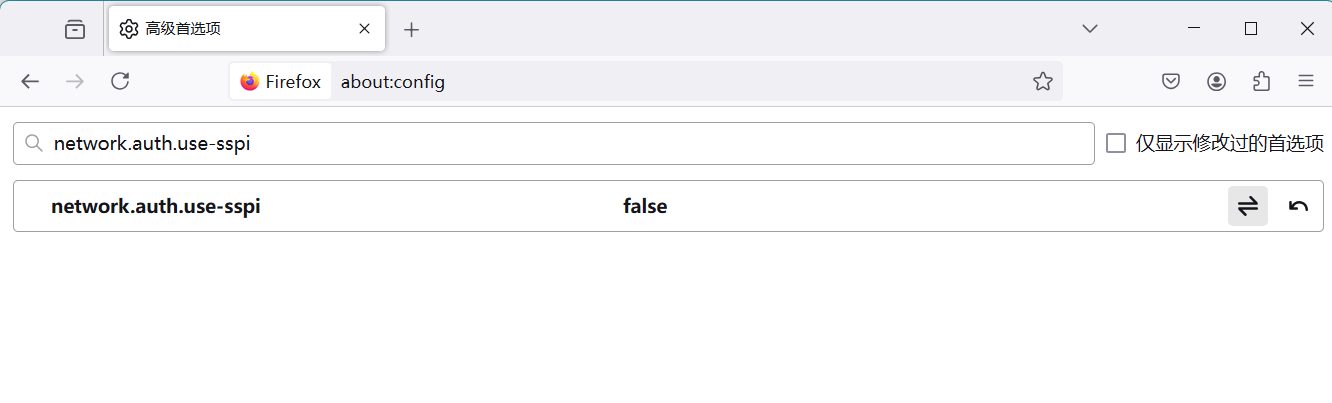

Search for network.auth.use-sspi and double-click it to change the value to false.

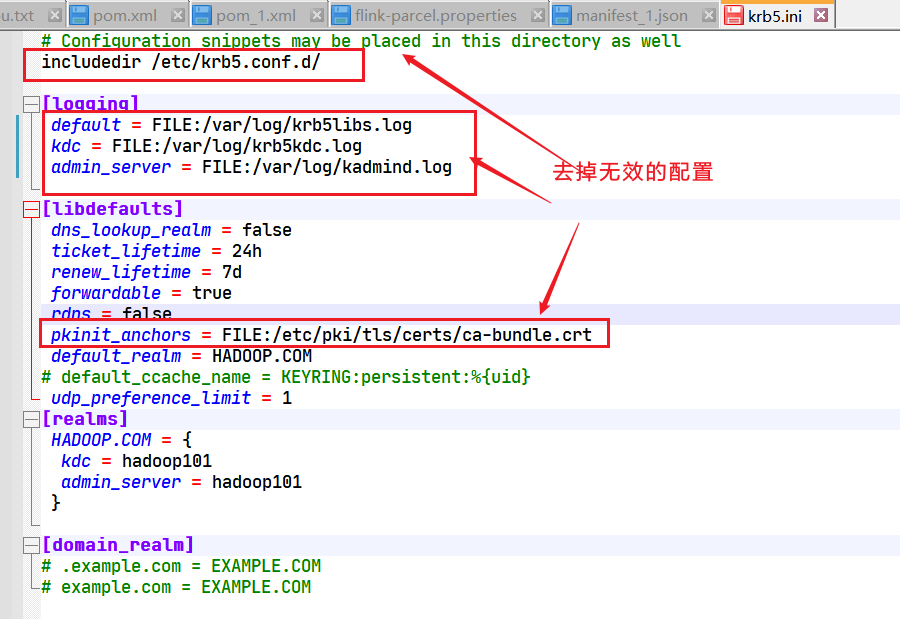

Install the Kerberos client for Windows: find the installer kfw-4.1-amd64.msi online and run it.

Then copy the contents of the cluster's /etc/krb5.conf file into C:\ProgramData\MIT\Kerberos5\krb5.ini.

The final configuration is as follows:

```
[logging]

[libdefaults]
  dns_lookup_realm = false
  ticket_lifetime = 24h
  dns_lookup_kdc = false
  renew_lifetime = 7d
  forwardable = true
  rdns = false
  default_realm = HADOOP.COM
  udp_preference_limit = 1

[realms]
  HADOOP.COM = {
    kdc = hadoop101
    admin_server = hadoop101
  }

[domain_realm]
```

After installing the Kerberos for Windows client, start it, restart Firefox, and visit http://hadoop101:9870.

Enter the principal name and password, then refresh the page:
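To check SPNEGO without a browser, the same NameNode endpoint can be queried with curl from a cluster node that already holds a ticket. A sketch assuming curl was built with GSS-API support (curl -V lists GSS-API or SPNEGO under Features) and that hadoop101:9870 is the NameNode HTTP address, as above; webhdfs_list is a hypothetical wrapper around the standard WebHDFS LISTSTATUS call.

```shell
# Hypothetical helper: list an HDFS path over WebHDFS using SPNEGO.
# $1 = NameNode host:port (default hadoop101:9870), $2 = HDFS path (default /)
webhdfs_list() {
  nn="${1:-hadoop101:9870}"
  path="${2:-/}"
  # --negotiate enables SPNEGO; curl needs a dummy "-u :" to activate the
  # auth code, and the identity actually comes from the Kerberos ticket cache
  curl -sS --negotiate -u : "http://${nn}/webhdfs/v1${path}?op=LISTSTATUS"
}

# Usage (after kinit hive/hive@HADOOP.COM):
#   webhdfs_list hadoop101:9870 /user
```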