Data Warehouse Code Framework and Environment Setup

1. Create the Project

Create a Maven project named gmall2023-realtime. The project's pom is as follows:

<modules>

<module>realtime-common</module>

<module>realtime-dwd</module>

<module>realtime-dws</module>

<module>realtime-dim</module>

</modules>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<java.version>1.8</java.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<flink.version>1.17.2</flink.version>

<scala.version>2.12</scala.version>

<hadoop.version>3.3.6</hadoop.version>

<flink-cdc.vesion>2.4.2</flink-cdc.vesion>

<jdbc-connector.version>3.1.0-1.17</jdbc-connector.version>

<fastjson.version>1.2.83</fastjson.version>

<hbase.version>2.6.1-hadoop3</hbase.version>

<slf4j.version>1.7.36</slf4j.version>

</properties>

<dependencies>

<!-- Common dependencies required by every module; modules inherit them automatically -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- Makes the Flink web UI available when the job is run inside IDEA -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- Flink logs through slf4j, which is only a logging facade; log4j is used here as the concrete logging implementation -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-to-slf4j</artifactId>

<version>2.21.1</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-loader</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-runtime</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-files</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

</dependencies>

<dependencyManagement>

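<!-- Dependency versions managed centrally for the project; a dependency is only pulled in when a module declares it, and the module does not need to specify a version -->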

<dependencies>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>${fastjson.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

<version>${flink-cdc.vesion}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-hbase-2.2</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.doris</groupId>

<artifactId>flink-doris-connector-1.17</artifactId>

<version>1.6.2</version>

</dependency>

<!-- HBase dependencies -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>${hbase.version}</version>

</dependency>

<dependency>

<groupId>commons-beanutils</groupId>

<artifactId>commons-beanutils</artifactId>

<version>1.10.1</version>

</dependency>

<dependency>

<groupId>com.janeluo</groupId>

<artifactId>ikanalyzer</artifactId>

<version>2012_u6</version>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>3.3.0</version>

</dependency>

<dependency>

<groupId>io.lettuce</groupId>

<artifactId>lettuce-core</artifactId>

<version>6.3.2.RELEASE</version>

</dependency>

</dependencies>

</dependencyManagement>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.1.1</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

<exclude>org.apache.hadoop:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<!-- Do not copy the signature files in the META-INF folder; otherwise the JAR may throw SecurityExceptions about invalid signature files when it is used. -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers combine.children="append">

<!-- The service transformer is needed to merge META-INF/services files -->

<!-- The service files that register connector and format factory classes would otherwise overwrite each other when packaged; ServicesResourceTransformer merges them -->

<transformer

implementation="org.apache.maven.plugins.shade.resource.ServicesResourceTransformer"/>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

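</build>

The parent pom directly declares the dependencies that do not need to be bundled into the job jar; all of them use the provided scope. The versions of all other dependencies are declared in the parent pom's dependencyManagement tag.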
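The inheritance and version management described above only apply to a module whose pom names this project as its parent. A minimal sketch of such a module pom follows. The parent's groupId and version are not shown in this document; org.rocket.flink and 1.0-SNAPSHOT are assumed here because the module poms in section 2 use them for realtime-common. The jedis entry is only an illustration of a managed dependency declared without a version.

```xml
<!-- Sketch of a module pom; the parent coordinates below are assumptions -->
<project xmlns="http://maven.apache.org/POM/4.0.0">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>org.rocket.flink</groupId>
        <artifactId>gmall2023-realtime</artifactId>
        <version>1.0-SNAPSHOT</version>
    </parent>

    <!-- groupId and version are inherited from the parent -->
    <artifactId>realtime-dws</artifactId>

    <dependencies>
        <!-- Managed in the parent's dependencyManagement, so no version here -->
        <dependency>
            <groupId>redis.clients</groupId>
            <artifactId>jedis</artifactId>
        </dependency>
    </dependencies>
</project>
```

The provided-scope dependencies in the parent's dependencies block (for example flink-streaming-java) need no entry in the module at all, because the module inherits them. For the modules list to be valid, the parent itself must use pom packaging.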

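2. Project Module Design

The project consists of one parent project with four modules under it:

(1) realtime-common: imports the shared third-party dependencies and holds utility classes, entity classes, and so on.

(2) realtime-dim: holds the DIM-layer business code.

(3) realtime-dwd: holds the DWD-layer business code.

(4) realtime-dws: holds the DWS-layer business code.

The last three modules are collectively called business modules, and each of them must declare realtime-common as a dependency.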

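2.1 The realtime-common Module

This project needs a large number of dependencies. Besides those declared in the parent project (dependencies the cluster already provides), many are not available on the cluster (referred to below as third-party dependencies) and have to be bundled into the jar. Bundling them into every business module makes each jar large, packaging slow, and deployment inefficient. To improve this, we build a common module, realtime-common, declare all third-party dependencies in its POM, and have the business modules depend only on realtime-common. Note, however, that if the realtime-common dependency keeps the default compile scope, a business module's jar still contains every third-party dependency, which does not solve the problem. We therefore change the scope to provided and copy the packaged realtime-common jar into the lib directory of the cluster's Flink installation.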
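The scope change described above would be made where a business module declares realtime-common. The following is a minimal sketch, assuming the groupId org.rocket.flink and version 1.0-SNAPSHOT used in the module poms of this document. The module poms shown in sections 2.2 to 2.4 omit the scope element, so treat the scope line as an illustration of the paragraph above rather than as the project's actual file.

```xml
<dependencies>
    <dependency>
        <groupId>org.rocket.flink</groupId>
        <artifactId>realtime-common</artifactId>
        <version>1.0-SNAPSHOT</version>
        <!-- provided: available at compile time, left out of the shaded job jar -->
        <scope>provided</scope>
    </dependency>
</dependencies>
```

With this scope, the job only runs on the cluster if the realtime-common jar is present in Flink's lib directory, and a local run from the IDE needs provided-scope dependencies added to the run classpath.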

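To keep the submodules' code lean, we also put the base classes, utility classes, entity classes, constant classes, and shared user-defined functions in realtime-common. The pom of realtime-common is as follows: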

<dependencies>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka</artifactId>

</dependency>

<dependency>

<groupId>com.ververica</groupId>

<artifactId>flink-connector-mysql-cdc</artifactId>

</dependency>

<!-- HBase dependencies -->

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-hbase-2.2</artifactId>

</dependency>

<dependency>

<groupId>org.apache.doris</groupId>

<artifactId>flink-doris-connector-1.17</artifactId>

</dependency>

<dependency>

<groupId>commons-beanutils</groupId>

<artifactId>commons-beanutils</artifactId>

</dependency>

<dependency>

<groupId>io.lettuce</groupId>

<artifactId>lettuce-core</artifactId>

</dependency>

</dependencies>

Create a log4j.properties file in the resources directory:

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.target=System.out

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %10p (%c:%M) - %m%n

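log4j.rootLogger=error,stdout

Flink uses log4j as its default logging framework, so the application prints logs to the console when it starts. A single log4j configuration file in the resources directory of the common module takes effect for all modules.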

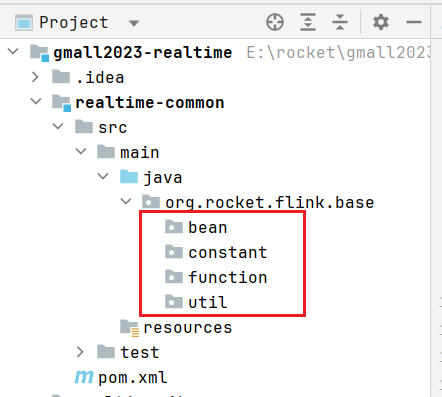

Create the following package structure under the realtime-common package:

2.2 Create the realtime-dim Submodule

A business module only needs to depend on realtime-common:

<dependencies>

<dependency>

<groupId>org.rocket.flink</groupId>

<artifactId>realtime-common</artifactId>

<version>1.0-SNAPSHOT</version>

</dependency>

</dependencies>

2.3 Create the realtime-dwd Submodule

A business module only needs to depend on realtime-common:

<dependencies>

<dependency>

<groupId>org.rocket.flink</groupId>

<artifactId>realtime-common</artifactId>

<version>1.0-SNAPSHOT</version>

</dependency>

</dependencies>

2.4 Create the realtime-dws Submodule

A business module only needs to depend on realtime-common:

<dependencies>

<dependency>

<groupId>org.rocket.flink</groupId>

<artifactId>realtime-common</artifactId>

<version>1.0-SNAPSHOT</version>

</dependency>

</dependencies>

3. Host the Code on Gitee

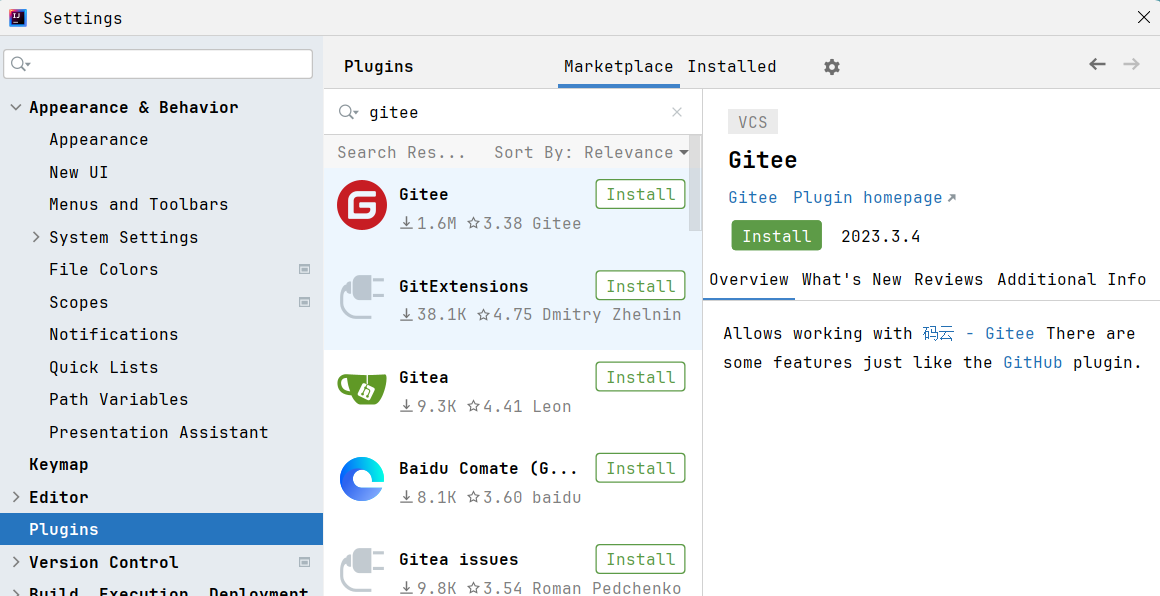

3.1 Install the Gitee Plugin

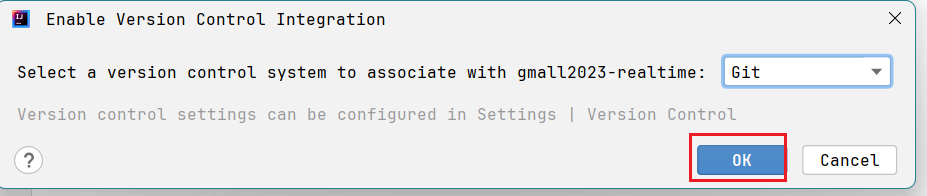

3.2 Use Git for Version Control

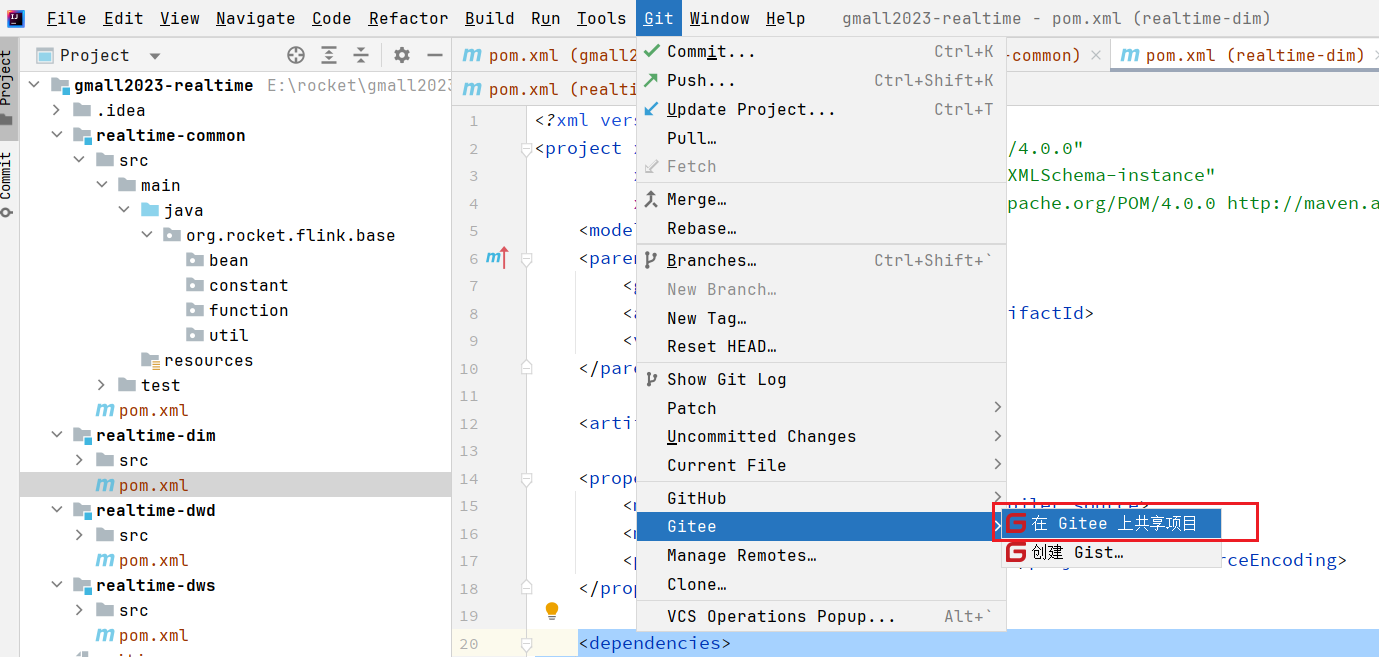

3.3 Upload the Code to the Gitee Server

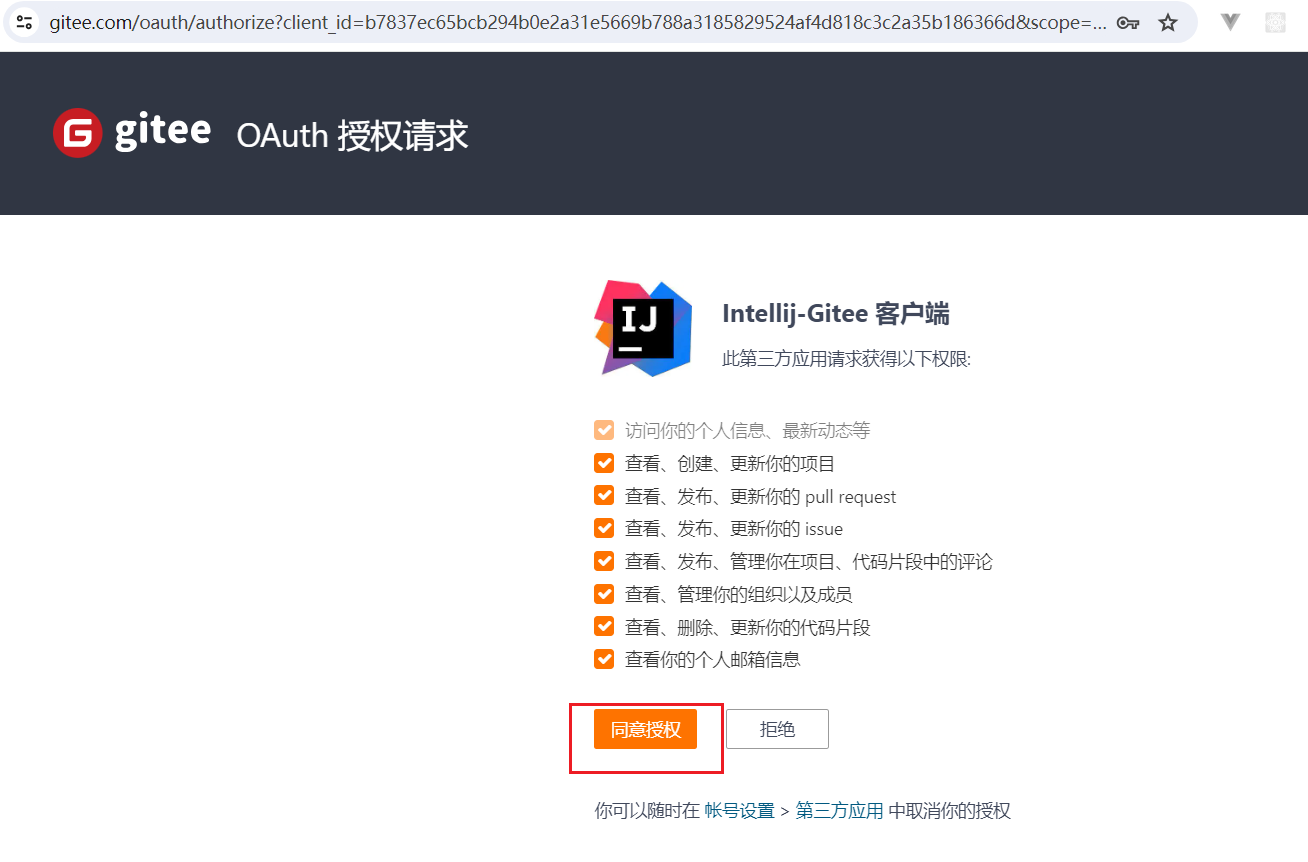

Click to log in to your account; the browser opens for login and authorization:

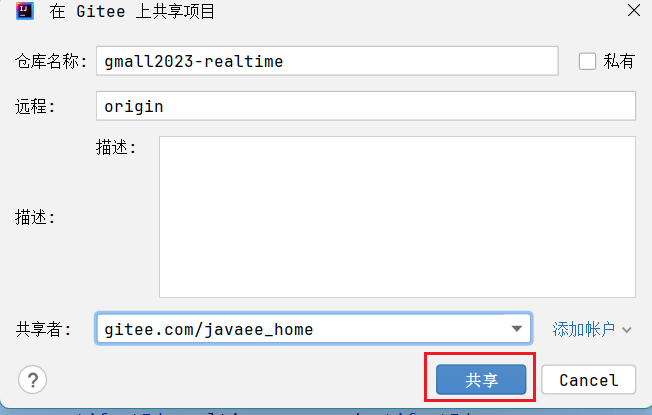

Click Share:

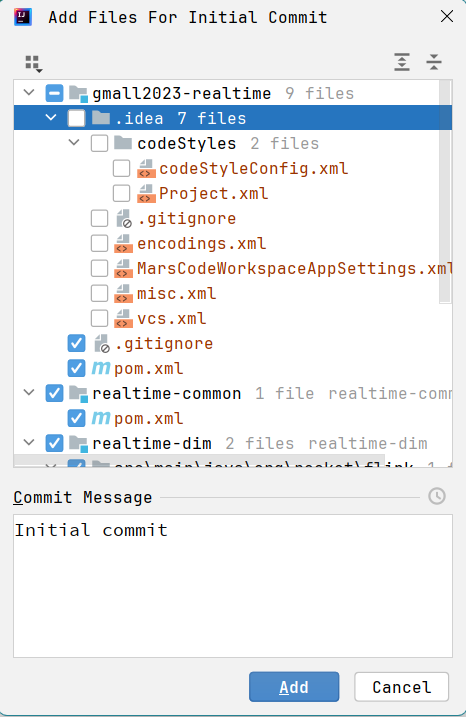

Select the files to upload and click Add.

When the upload finishes, a notification appears in the lower-right corner.

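4. Data Warehouse Component Setup

4.1 MySQL Environment Setup

See the MySQL installation guide.

4.2 Kafka Environment Setup

See the Kafka cluster deployment guide.

4.3 Maxwell Environment Setup

See the Maxwell deployment guide.

4.4 Flink Environment Setup

See the Flink cluster deployment guide.

4.5 HBase Environment Setup

See the HBase installation guide.

4.6 Redis Environment Setup

See the Redis standalone installation guide.

4.7 Doris Environment Setup

See the Doris cluster deployment guide.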