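Building a highly available canal cluster

This guide sets up a zk cluster, a canal-server cluster, canal-admin, and canal-adapter to make data synchronization highly available.

1. Cluster planning

Making canal data synchronization highly available (HA) while keeping the cluster easy to manage requires zk and canal-admin. The cluster is planned as follows: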

| Host | canal-server | zk cluster | canal-admin | canal-adapter |
|---|---|---|---|---|
| 192.168.101.102 | | | ✓ | ✓ |
| 192.168.101.103 | ✓ | | | |
| 192.168.101.104 | ✓ | | | |
| 192.168.101.105 | | ✓ | | ✓ |
| 192.168.101.106 | | ✓ | | |
| 192.168.101.107 | | ✓ | | |
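The plan can also be written down as data and checked for basic HA properties. The following is a minimal sketch, not part of canal: the role assignment is taken from the later sections of this guide (canal-server on 103 and 104, zk on 105 to 107, canal-admin on 102, canal-adapter on 102 and 105), and the names `CLUSTER_PLAN`, `role_counts`, and `ha_problems` are made up for illustration.

```python
from collections import Counter

# Role assignment assumed from the later sections of this guide.
CLUSTER_PLAN = {
    "192.168.101.102": {"canal-admin", "canal-adapter"},
    "192.168.101.103": {"canal-server"},
    "192.168.101.104": {"canal-server"},
    "192.168.101.105": {"zk", "canal-adapter"},
    "192.168.101.106": {"zk"},
    "192.168.101.107": {"zk"},
}


def role_counts(plan):
    """Count how many hosts carry each role."""
    counts = Counter()
    for roles in plan.values():
        counts.update(roles)
    return counts


def ha_problems(plan):
    """Return a list of HA problems found in the plan; an empty list means none."""
    counts = role_counts(plan)
    problems = []
    if counts["canal-server"] < 2:
        problems.append("need at least 2 canal-server nodes for failover")
    if counts["canal-adapter"] < 2:
        problems.append("need at least 2 canal-adapter nodes for failover")
    if counts["zk"] < 3 or counts["zk"] % 2 == 0:
        problems.append("zk ensemble should have an odd size of at least 3")
    return problems


if __name__ == "__main__":
    print(dict(role_counts(CLUSTER_PLAN)))
    print(ha_problems(CLUSTER_PLAN))
```

canal-admin is deliberately not checked: this guide runs a single canal-admin, and section 6.2 shows that synchronization keeps working while it is down.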

2. Set up the zk cluster

- Install the zk cluster

See the Zookeeper cluster installation notes.

- Start the zk cluster

[jack@hadoop105 ~]$ zk_helper start

---------- zookeeper hadoop105 启动 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

---------- zookeeper hadoop106 启动 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

---------- zookeeper hadoop107 启动 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[jack@hadoop105 ~]$ zk_helper status

---------- zookeeper hadoop105 状态 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

---------- zookeeper hadoop106 状态 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

---------- zookeeper hadoop107 状态 ------------

ZooKeeper JMX enabled by default

Using config: /opt/module/zookeeper-3.8.4/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

3. Deploy canal-admin

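3.1 Install and start canal-admin

To deploy and start canal-admin, follow sections 1 through 4 of the canal-admin usage notes. One difference: name the canal-admin database canal_manager2 so that it stays separate from the database created in the earlier chapter.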

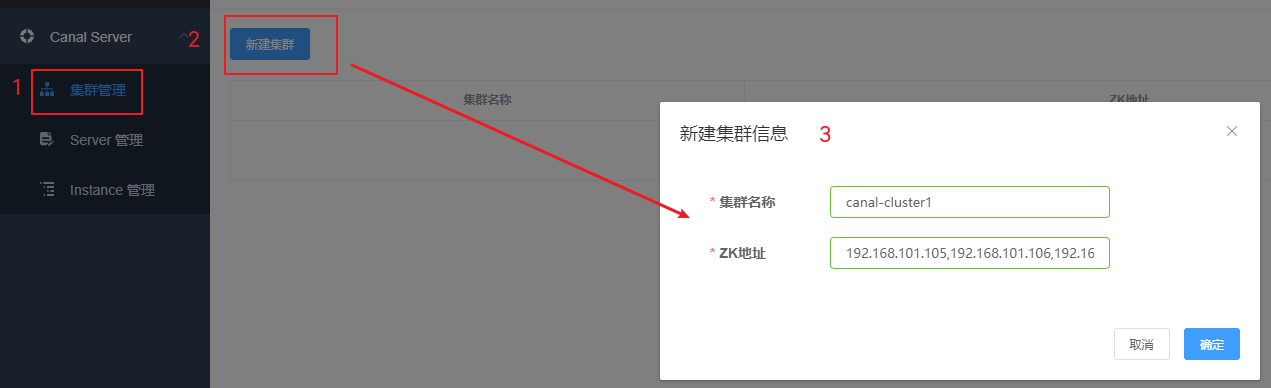

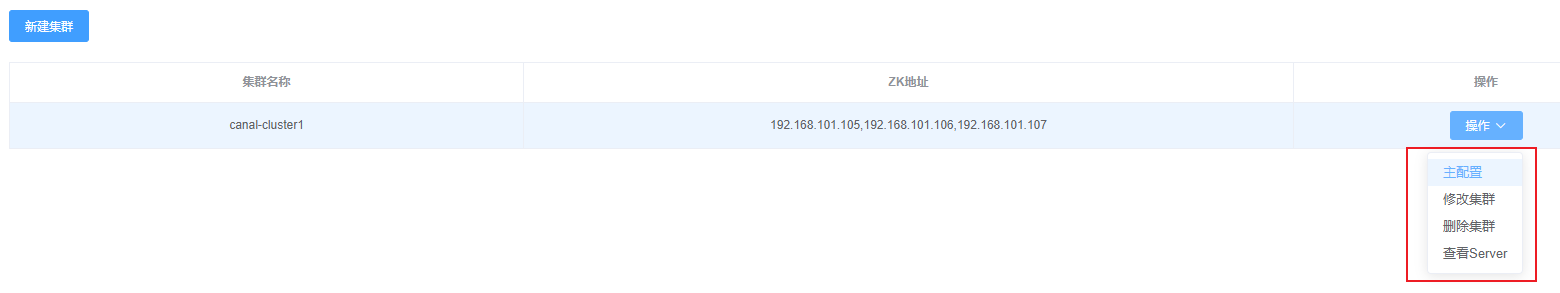

3.2 Configure zk

Click Cluster Management ==> New Cluster. As shown in the figure, a zk cluster named canal-cluster1 is configured:

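3.3 Set the main configuration

In the canal-cluster1 row, click Operations ==> Main Configuration. Click Load Template and change the template to the following configuration:

Configuration details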

#################################################

######### common argument #############

#################################################

canal.port = 11111

canal.metrics.pull.port = 11112

# canal instance user/passwd

# canal.user = canal

# canal.passwd = E3619321C1A937C46A0D8BD1DAC39F93B27D4458

# canal-admin address

canal.admin.manager = 192.168.101.102:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

# admin auto register

canal.admin.register.auto = true

canal.admin.register.cluster =canal-cluster1

#canal.admin.register.name =

# ZooKeeper address

canal.zkServers =192.168.101.105:2181,192.168.101.106:2181,192.168.101.107:2181

# flush data to zk

canal.zookeeper.flush.period = 1000

canal.withoutNetty = false

# tcp, kafka, rocketMQ, rabbitMQ, pulsarMQ

canal.serverMode = tcp

# flush meta cursor/parse position to file

canal.file.data.dir = ${canal.conf.dir}

canal.file.flush.period = 1000

## memory store RingBuffer size, should be Math.pow(2,n)

canal.instance.memory.buffer.size = 16384

## memory store RingBuffer used memory unit size , default 1kb

canal.instance.memory.buffer.memunit = 1024

## meory store gets mode used MEMSIZE or ITEMSIZE

canal.instance.memory.batch.mode = MEMSIZE

canal.instance.memory.rawEntry = true

## detecing config

canal.instance.detecting.enable = false

#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

canal.instance.detecting.sql = select 1

canal.instance.detecting.interval.time = 3

canal.instance.detecting.retry.threshold = 3

canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery

canal.instance.transaction.size = 1024

# mysql fallback connected to new master should fallback times

canal.instance.fallbackIntervalInSeconds = 60

# network config

canal.instance.network.receiveBufferSize = 16384

canal.instance.network.sendBufferSize = 16384

canal.instance.network.soTimeout = 30

# binlog filter config

canal.instance.filter.druid.ddl = true

canal.instance.filter.query.dcl = false

canal.instance.filter.query.dml = false

canal.instance.filter.query.ddl = false

canal.instance.filter.table.error = false

canal.instance.filter.rows = false

canal.instance.filter.transaction.entry = false

canal.instance.filter.dml.insert = false

canal.instance.filter.dml.update = false

canal.instance.filter.dml.delete = false

# binlog format/image check

canal.instance.binlog.format = ROW,STATEMENT,MIXED

canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation

canal.instance.get.ddl.isolation = false

# parallel parser config

canal.instance.parser.parallel = true

## concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()

#canal.instance.parser.parallelThreadSize = 16

## disruptor ringbuffer size, must be power of 2

canal.instance.parser.parallelBufferSize = 256

# tsdb database that stores the table metadata of the synchronized databases

canal.instance.tsdb.enable = true

# Must not be set together with canal.instance.tsdb.url

#canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}

canal.instance.tsdb.url = jdbc:mysql://192.168.101.102:3306/canal_tsdb?useSSL=false&useUnicode=true

canal.instance.tsdb.dbUsername = root

canal.instance.tsdb.dbPassword = 123456

# dump snapshot interval, default 24 hour

canal.instance.tsdb.snapshot.interval = 24

# purge snapshot expire , default 360 hour(15 days)

canal.instance.tsdb.snapshot.expire = 360

#################################################

######### destinations #############

#################################################

canal.destinations =

# conf root dir

canal.conf.dir = ../conf

# auto scan instance dir add/remove and start/stop instance

canal.auto.scan = true

canal.auto.scan.interval = 5

# set this value to 'true' means that when binlog pos not found, skip to latest.

# WARN: pls keep 'false' in production env, or if you know what you want.

canal.auto.reset.latest.pos.mode = false

# This setting must be changed to mysql-tsdb.xml so that table-structure changes are stored in MySQL

#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = manager

canal.instance.global.lazy = false

canal.instance.global.manager.address = ${canal.admin.manager}

# This setting must be changed to default-instance.xml

#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml

#canal.instance.global.spring.xml = classpath:spring/file-instance.xml

canal.instance.global.spring.xml = classpath:spring/default-instance.xml

Click the Save button to finish the main configuration.

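Key parameters:

- canal.instance.tsdb.xxx: by default, the history of table-structure changes in the source database is stored in a local H2 database. If the active canal-server goes down, the canal-server that takes over cannot read that H2 file, which disrupts the whole canal-server cluster. TSDB is therefore pointed at an external MySQL database that all canal-server nodes share as the store for table-structure changes.

- canal.instance.global.spring.xml: path of the global Spring component file. To work with the zk cluster it must be default-instance.xml. The default, file-instance.xml, keeps its metadata in local files.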
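The settings described above can be checked mechanically before the main configuration is saved. The sketch below is only an illustration under stated assumptions: it uses a deliberately simple `key = value` parser (no line continuations or escapes), and `parse_properties` and `check_ha_settings` are hypothetical helper names, not part of canal or canal-admin.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so JDBC URLs with '=' in them survive.
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_ha_settings(props):
    """Return a list of problems with the HA-related settings from this guide."""
    problems = []
    if not props.get("canal.zkServers"):
        problems.append("canal.zkServers is empty")
    if not props.get("canal.instance.global.spring.xml", "").endswith("default-instance.xml"):
        problems.append("canal.instance.global.spring.xml should use default-instance.xml")
    if props.get("canal.instance.tsdb.enable") == "true":
        if not props.get("canal.instance.tsdb.spring.xml", "").endswith("mysql-tsdb.xml"):
            problems.append("canal.instance.tsdb.spring.xml should use mysql-tsdb.xml")
        if not props.get("canal.instance.tsdb.url", "").startswith("jdbc:mysql://"):
            problems.append("canal.instance.tsdb.url should point at the shared MySQL")
    return problems


# Values copied from the main configuration shown in this section.
SAMPLE = """
canal.zkServers =192.168.101.105:2181,192.168.101.106:2181,192.168.101.107:2181
canal.instance.tsdb.enable = true
canal.instance.tsdb.url = jdbc:mysql://192.168.101.102:3306/canal_tsdb?useSSL=false&useUnicode=true
canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml
canal.instance.global.spring.xml = classpath:spring/default-instance.xml
"""

if __name__ == "__main__":
    print(check_ha_settings(parse_properties(SAMPLE)))
```

Run against the template defaults (H2 TSDB, file-instance.xml, empty canal.zkServers), the same check reports each of the settings that this section changes.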

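3.4 Create the tsdb database

Connect to the database on 192.168.101.102 with the mysql client, create the canal_tsdb database, select it, and run the conf/spring/tsdb/sql/create_table.sql script:

mysql> CREATE DATABASE `canal_tsdb` /*!40100 DEFAULT CHARACTER SET utf8 */;

mysql> USE canal_tsdb;

mysql> source /opt/module/canal-deployer/conf/spring/tsdb/sql/create_table.sql;

4. Deploy the canal-server cluster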

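In cluster mode there are several canal-server nodes, but for a given instance only one of them is in the running state. When a client connects, it queries the Zookeeper node to find the canal-server that is running and connects to that one.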
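The lookup a client performs can be illustrated with the data that appears later in this guide: the znode /otter/canal/destinations/follower_1/running holds a small JSON document, and /otter/canal/cluster lists the registered servers. The sketch below only parses those values. Reading the znodes themselves (for example with a ZooKeeper client library) is left out, and the function names are hypothetical.

```python
import json


def parse_running_node(payload):
    """Return (host, port) of the active canal-server, or None if not active."""
    data = json.loads(payload)
    if not data.get("active"):
        return None
    host, _, port = data["address"].rpartition(":")
    return host, int(port)


def standby_servers(cluster_children, payload):
    """Return the registered servers that are not currently running the instance."""
    active = parse_running_node(payload)
    active_addr = "{}:{}".format(*active) if active else None
    return [child for child in cluster_children if child != active_addr]


if __name__ == "__main__":
    # Values as they appear in the zkCli output of this guide.
    running = '{"active":true,"address":"192.168.101.104:11111"}'
    cluster = ["192.168.101.103:11111", "192.168.101.104:11111"]
    print(parse_running_node(running))
    print(standby_servers(cluster, running))
```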

4.1 Distribute canal-deployer from 102 to 103 and 104

[jack@hadoop102 module]$ xsync canal-deployer

4.2 Modify the configuration and clean up data

- canal-server only needs the canal-admin connection settings, configured as follows:

[jack@hadoop103 canal-deployer]$ cd canal-deployer

[jack@hadoop103 canal-deployer]$ vi conf/canal.properties

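# Docker virtual NICs on this host interfere with address detection, so set the local IP explicitly

canal.ip =192.168.101.103

# IP registered in ZooKeeper so that the other canal-server nodes can discover this one

canal.register.ip =192.168.101.103

# canal-admin address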

canal.admin.manager = 192.168.101.102:8089

canal.admin.port = 11110

canal.admin.user = admin

canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

# admin auto register

canal.admin.register.auto = true

canal.admin.register.cluster =canal-cluster1

canal.admin.register.name =canal-server01

canal.destinations=

canal.auto.scan=true

On 103 and 104, set canal.ip, canal.register.ip, and canal.admin.register.name in canal.properties to each machine's own values.

- Clean up stale data

[jack@hadoop103 canal-deployer]$ rm -rf conf/demo/ conf/example/ logs/canal/ logs/example/ logs/demo/

4.3 Start canal-server

[jack@hadoop103 canal-deployer]$ sh bin/restart.sh

[jack@hadoop104 canal-deployer]$ sh bin/restart.sh

The server management page of canal-admin now lists the canal-server nodes registered as canal-server01 and canal-server02:

Check the server logs of canal-server01 and canal-server02. At this point the server cluster has started:

Connecting to the zk cluster shows the registration information:

[jack@hadoop105 zookeeper-3.8.4]$ sh bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 5] ls /otter/canal/cluster

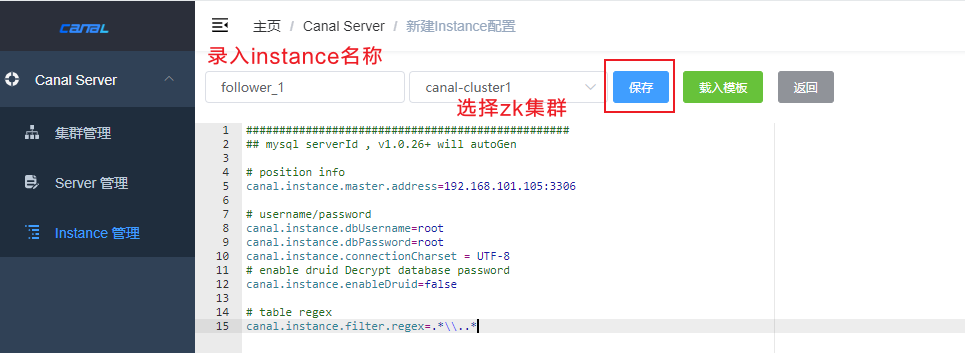

[192.168.101.103:11111, 192.168.101.104:11111]4.4 配置instance实例

- Click New Instance

As shown in the figure below, create an instance named follower_1

- Configure follower_1 as follows, mainly setting the connection information of the source database:

#################################################

## mysql serverId , v1.0.26+ will autoGen

# position info

canal.instance.master.address=192.168.101.105:3306

# username/password

canal.instance.dbUsername=root

canal.instance.dbPassword=root

canal.instance.connectionCharset = UTF-8

# enable druid Decrypt database password

canal.instance.enableDruid=false

# table regex

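canal.instance.filter.regex=.*\\..*

- As shown in the figure, the instance started on canal-server02, which means canal-server02 is the canal-server currently doing the work.

- View the instance startup log

- The address of the currently active canal-server can also be read from zk: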

[zk: localhost:2181(CONNECTED) 2] get /otter/canal/destinations/follower_1/running

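{"active":true,"address":"192.168.101.104:11111"}

5. Deploy canal-adapter

canal-adapter subscribes to the tcp messages of the follower_1 instance and synchronizes the test.user table on 105 to the mytest.t_user table on 102 in real time. Upload the canal-adapter archive to machines 102 and 105: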

[jack@hadoop102 software]$ mkdir /opt/module/canal-adapter

[jack@hadoop102 software]$ tar -xvf canal.adapter-1.1.7.tar.gz -C /opt/module/canal-adapter

5.1 Configure canal-adapter

Modify the canal-adapter configuration files application.yml and rdb/mytest_user.yml on 102 and 105:

[jack@hadoop105 conf]$ pwd

/opt/module/canal-adapter/conf

[jack@hadoop105 conf]$ ll

总用量 8

-rw-r--r--. 1 jack wheel 1272 5月 20 06:45 application.yml

-rwxr-xr-x. 1 jack wheel 3106 10月 9 2023 logback.xml

drwxr-xr-x. 2 jack wheel 30 5月 15 07:46 META-INF

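drwxr-xr-x. 2 jack wheel 29 5月 20 06:46 rdb

canal-server now runs in cluster mode, so it cannot be connected to directly. The application.yml settings must be changed to connect through the zk cluster, and mytest_user.yml lists the specific fields to synchronize: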

application.yml:

server:
  port: 8081
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8
    default-property-inclusion: non_null

canal.conf:
  mode: tcp #tcp kafka rocketMQ rabbitMQ
  flatMessage: true
  zookeeperHosts: 192.168.101.105,192.168.101.106,192.168.101.107
  syncBatchSize: 1000
  retries: -1
  timeout:
  accessKey:
  secretKey:
  consumerProperties:
    # canal-server cannot be connected to directly
    #canal.tcp.server.host: 127.0.0.1:11111
    canal.tcp.zookeeper.hosts: 192.168.101.105,192.168.101.106,192.168.101.107
    canal.tcp.batch.size: 500
    canal.tcp.username:
    canal.tcp.password:
  srcDataSources:
    defaultDS:
      url: jdbc:mysql://192.168.101.105:3306/test?useUnicode=true&useSSL=false
      username: root
      password: root
  canalAdapters:
  - instance: follower_1 # canal instance Name or mq topic name
    groups:
    - groupId: g1
      outerAdapters:
      - name: logger
      - name: rdb
        key: mysql1
        properties:
          jdbc.driverClassName: com.mysql.jdbc.Driver
          jdbc.url: jdbc:mysql://192.168.101.102:3306/mytest?useUnicode=true&useSSL=false
          jdbc.username: root
          jdbc.password: 123456
          druid.stat.enable: false
          druid.stat.slowSqlMillis: 1000

rdb/mytest_user.yml:

dataSourceKey: defaultDS
destination: follower_1
groupId: g1
outerAdapterKey: mysql1
concurrent: true
dbMapping:
  database: test
  table: user
  targetTable: t_user
  targetPk:
    id: id
  # mapAll: true # map the whole table; requires identical column names in the source and target tables
  targetColumns: # target column: source column
    id: id
    name2: name
    createtime: createtime
    desc_info: desc_info
    wallet: wallet
    is_live: is_live
    id_card: id_card
    sex2: sex
  # etlCondition: "where c_time>={}"
  commitBatch: 300 # batch commit size

canal-adapter achieves high availability through groupId: of two canal-adapter nodes with the same groupId, only one does the work while the other stays on standby.
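The effect of the targetColumns mapping in rdb/mytest_user.yml can be shown with a few lines of Python. This is a simplified illustration of the mapping semantics (target column on the left, source column on the right), not the adapter's actual implementation. The sample row is the INSERT event for id 10 from the adapter log in section 5.2, and `map_row` is a made-up helper name.

```python
# Same mapping as targetColumns in rdb/mytest_user.yml: target column -> source column.
TARGET_COLUMNS = {
    "id": "id",
    "name2": "name",
    "createtime": "createtime",
    "desc_info": "desc_info",
    "wallet": "wallet",
    "is_live": "is_live",
    "id_card": "id_card",
    "sex2": "sex",
}


def map_row(source_row, target_columns):
    """Build the target row; an empty source name means 'same name as the target'."""
    return {
        target: source_row.get(source or target)
        for target, source in target_columns.items()
    }


if __name__ == "__main__":
    # Row taken from the INSERT event in the adapter log of this guide.
    source_row = {
        "id": 10,
        "name": "korea",
        "createtime": 1716162972000,
        "desc_info": "demo_test",
        "wallet": 202.22,
        "is_live": 0,
        "id_card": "51018220240501006",
        "sex": 1,
    }
    print(map_row(source_row, TARGET_COLUMNS))
```

The source columns name and sex end up in the target columns name2 and sex2, and every other column keeps its name.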

5.2 Start canal-adapter

Start canal-adapter on 102 and on 105:

[jack@hadoop102 canal-adapter]$ sh bin/restart.sh

[jack@hadoop105 canal-adapter]$ sh bin/restart.sh

View the startup log:

2024-05-20 06:56:50.360 [main] INFO c.a.otter.canal.adapter.launcher.CanalAdapterApplication - No active profile set, falling back to default profiles: default

2024-05-20 06:56:52.424 [main] INFO org.springframework.cloud.context.scope.GenericScope - BeanFactory id=bedb0f3d-844f-3b5d-89ae-6c0276122cf0

2024-05-20 06:56:53.449 [main] INFO o.s.boot.web.embedded.tomcat.TomcatWebServer - Tomcat initialized with port(s): 8081 (http)

2024-05-20 06:56:53.483 [main] INFO org.apache.coyote.http11.Http11NioProtocol - Initializing ProtocolHandler ["http-nio-8081"]

2024-05-20 06:56:53.483 [main] INFO org.apache.catalina.core.StandardService - Starting service [Tomcat]

2024-05-20 06:56:53.484 [main] INFO org.apache.catalina.core.StandardEngine - Starting Servlet engine: [Apache Tomcat/9.0.52]

2024-05-20 06:56:53.778 [main] INFO o.a.catalina.core.ContainerBase.[Tomcat].[localhost].[/] - Initializing Spring embedded WebApplicationContext

2024-05-20 06:56:53.778 [main] INFO o.s.b.w.s.context.ServletWebServerApplicationContext - Root WebApplicationContext: initialization completed in 3262 ms

2024-05-20 06:56:56.385 [main] INFO com.alibaba.druid.pool.DruidDataSource - {dataSource-1} inited

2024-05-20 06:56:56.602 [main] INFO org.apache.curator.framework.imps.CuratorFrameworkImpl - Starting

2024-05-20 06:56:56.624 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:zookeeper.version=3.5.6-c11b7e26bc554b8523dc929761dd28808913f091, built on 10/08/2019 20:18 GMT

2024-05-20 06:56:56.625 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:host.name=hadoop105

2024-05-20 06:56:56.625 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.version=1.8.0_391

......

2024-05-20 06:56:58.637 [ZkClient-EventThread-32-192.168.101.105,192.168.101.106,192.168.101.107] INFO org.I0Itec.zkclient.ZkEventThread - Starting ZkClient event thread.

2024-05-20 06:56:58.648 [main-SendThread(192.168.101.107:2181)] INFO org.apache.zookeeper.ClientCnxn - Opening socket connection to server hadoop107/192.168.101.107:2181. Will not attempt to authenticate using SASL (unknown error)

2024-05-20 06:56:58.660 [main-SendThread(192.168.101.107:2181)] INFO org.apache.zookeeper.ClientCnxn - Socket connection established, initiating session, client: /192.168.101.105:58308, server: hadoop107/192.168.101.107:2181

2024-05-20 06:56:58.661 [main] INFO org.I0Itec.zkclient.ZkClient - Waiting for keeper state SyncConnected

2024-05-20 06:56:58.673 [main-SendThread(192.168.101.107:2181)] INFO org.apache.zookeeper.ClientCnxn - Session establishment complete on server hadoop107/192.168.101.107:2181, sessionid = 0x30007c04b0a001e, negotiated timeout = 40000

2024-05-20 06:56:58.674 [main-EventThread] INFO org.I0Itec.zkclient.ZkClient - zookeeper state changed (SyncConnected)

2024-05-20 06:56:59.237 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterLoader - Start adapter for canal-client mq topic: follower_1-g1 succeed

2024-05-20 06:56:59.237 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterService - ## the canal client adapters are running now ......

2024-05-20 06:56:59.251 [Thread-4] INFO c.a.otter.canal.adapter.launcher.loader.AdapterProcessor - =============> Start to connect destination: follower_1 <=============

2024-05-20 06:56:59.278 [main] INFO c.a.otter.canal.adapter.launcher.CanalAdapterApplication - Started CanalAdapterApplication in 11.005 seconds (JVM running for 12.106)

2024-05-20 07:21:20.780 [Thread-2] INFO c.a.o.canal.client.adapter.rdb.monitor.RdbConfigMonitor - Change a rdb mapping config: mytest_user.yml of canal adapter

2024-05-20 07:21:21.106 [pool-10-thread-1] INFO c.a.o.canal.client.adapter.logger.LoggerAdapterExample - DML: {"data":null,"database":"test","destination":"follower_1","es":1716158551000,"groupId":"g1","isDdl":true,"old":null,"pkNames":null,"sql":"/* ApplicationName=DBeaver 24.0.1 - Main */ RENAME TABLE test.t_user TO test.`user`","table":"user","ts":1716160881105,"type":"RENAME"}

2024-05-20 07:21:21.106 [pool-10-thread-1] INFO c.a.o.canal.client.adapter.logger.LoggerAdapterExample - DML: {"data":[{"id":10,"name":"korea","createtime":1716162972000,"desc_info":"demo_test","wallet":202.22,"is_live":0,"id_card":"51018220240501006","sex":1}],"database":"test","destination":"follower_1","es":1716160300000,"groupId":"g1","isDdl":false,"old":null,"pkNames":["id"],"sql":"","table":"user","ts":1716160881105,"type":"INSERT"}

2024-05-20 07:21:21.136 [pool-5-thread-1] DEBUG c.a.o.canal.client.adapter.rdb.service.RdbSyncService - DML: {"data":{"id":10,"name":"korea","createtime":1716162972000,"desc_info":"demo_test","wallet":202.22,"is_live":0,"id_card":"51018220240501006","sex":1},"database":"test","destination":"follower_1","old":null,"table":"user","type":"INSERT"}

2024-05-20 07:25:46.736 [main] INFO c.a.otter.canal.adapter.launcher.CanalAdapterApplication - No active profile set, falling back to default profiles: default

2024-05-20 07:25:48.138 [main] INFO org.springframework.cloud.context.scope.GenericScope - BeanFactory id=bedb0f3d-844f-3b5d-89ae-6c0276122cf0

2024-05-20 07:25:48.842 [main] INFO o.s.boot.web.embedded.tomcat.TomcatWebServer - Tomcat initialized with port(s): 8081 (http)

2024-05-20 07:25:48.866 [main] INFO org.apache.coyote.http11.Http11NioProtocol - Initializing ProtocolHandler ["http-nio-8081"]

2024-05-20 07:25:48.866 [main] INFO org.apache.catalina.core.StandardService - Starting service [Tomcat]

2024-05-20 07:25:48.867 [main] INFO org.apache.catalina.core.StandardEngine - Starting Servlet engine: [Apache Tomcat/9.0.52]

2024-05-20 07:25:49.058 [main] INFO o.a.catalina.core.ContainerBase.[Tomcat].[localhost].[/] - Initializing Spring embedded WebApplicationContext

2024-05-20 07:25:49.059 [main] INFO o.s.b.w.s.context.ServletWebServerApplicationContext - Root WebApplicationContext: initialization completed in 2277 ms

2024-05-20 07:25:50.840 [main] INFO com.alibaba.druid.pool.DruidDataSource - {dataSource-1} inited

2024-05-20 07:25:51.054 [main] INFO org.apache.curator.framework.imps.CuratorFrameworkImpl - Starting

2024-05-20 07:25:51.087 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:zookeeper.version=3.5.6-c11b7e26bc554b8523dc929761dd28808913f091, built on 10/08/2019 20:18 GMT

2024-05-20 07:25:51.088 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:host.name=hadoop105

2024-05-20 07:25:51.088 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.version=1.8.0_391

2024-05-20 07:25:51.088 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.vendor=Oracle Corporation

2024-05-20 07:25:51.088 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.home=/opt/module/jdk1.8.0_391/jre

2024-05-20 07:25:51.088 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.class.path=.:/opt/module/canal-adapter/bin/../conf:/opt/module/canal-adapter/bin/

......

2024-05-20 07:25:52.408 [main] INFO o.s.boot.web.embedded.tomcat.TomcatWebServer - Tomcat started on port(s): 8081 (http) with context path ''

2024-05-20 07:25:52.414 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterService - ## syncSwitch refreshed.

2024-05-20 07:25:52.414 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterService - ## start the canal client adapters.

2024-05-20 07:25:52.422 [main] INFO c.a.otter.canal.client.adapter.support.ExtensionLoader - extension classpath dir: /opt/module/canal-adapter/plugin

2024-05-20 07:25:52.535 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterLoader - Load canal adapter: logger succeed

2024-05-20 07:25:52.539 [main] INFO c.a.otter.canal.client.adapter.rdb.config.ConfigLoader - ## Start loading rdb mapping config ...

2024-05-20 07:25:52.595 [main] INFO c.a.otter.canal.client.adapter.rdb.config.ConfigLoader - ## Rdb mapping config loaded

2024-05-20 07:25:52.654 [main] INFO com.alibaba.druid.pool.DruidDataSource - {dataSource-2} inited

2024-05-20 07:25:52.671 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterLoader - Load canal adapter: rdb succeed

2024-05-20 07:25:52.680 [main] INFO c.alibaba.otter.canal.connector.core.spi.ExtensionLoader - extension classpath dir: /opt/module/canal-adapter/plugin

2024-05-20 07:25:52.784 [main] INFO org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=192.168.101.105,192.168.101.106,192.168.101.107 sessionTimeout=90000 watcher=com.alibaba.otter.canal.common.zookeeper.ZkClientx@1fbf088b

2024-05-20 07:25:52.785 [main] INFO org.apache.zookeeper.ClientCnxnSocket - jute.maxbuffer value is 4194304 Bytes

2024-05-20 07:25:52.785 [main] INFO org.apache.zookeeper.ClientCnxn - zookeeper.request.timeout value is 0. feature enabled=

2024-05-20 07:25:52.786 [ZkClient-EventThread-30-192.168.101.105,192.168.101.106,192.168.101.107] INFO org.I0Itec.zkclient.ZkEventThread - Starting ZkClient event thread.

2024-05-20 07:25:52.798 [main-SendThread(192.168.101.106:2181)] INFO org.apache.zookeeper.ClientCnxn - Opening socket connection to server hadoop106/192.168.101.106:2181. Will not attempt to authenticate using SASL (unknown error)

2024-05-20 07:25:52.799 [main-SendThread(192.168.101.106:2181)] INFO org.apache.zookeeper.ClientCnxn - Socket connection established, initiating session, client: /192.168.101.105:44406, server: hadoop106/192.168.101.106:2181

2024-05-20 07:25:52.813 [main-SendThread(192.168.101.106:2181)] INFO org.apache.zookeeper.ClientCnxn - Session establishment complete on server hadoop106/192.168.101.106:2181, sessionid = 0x20007c3a21b0017, negotiated timeout = 40000

2024-05-20 07:25:52.814 [main] INFO org.I0Itec.zkclient.ZkClient - Waiting for keeper state SyncConnected

2024-05-20 07:25:52.815 [main-EventThread] INFO org.I0Itec.zkclient.ZkClient - zookeeper state changed (SyncConnected)

2024-05-20 07:25:53.229 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterLoader - Start adapter for canal-client mq topic: follower_1-g1 succeed

2024-05-20 07:25:53.230 [main] INFO c.a.o.canal.adapter.launcher.loader.CanalAdapterService - ## the canal client adapters are running now ......

2024-05-20 07:25:53.242 [Thread-4] INFO c.a.otter.canal.adapter.launcher.loader.AdapterProcessor - =============> Start to connect destination: follower_1 <=============

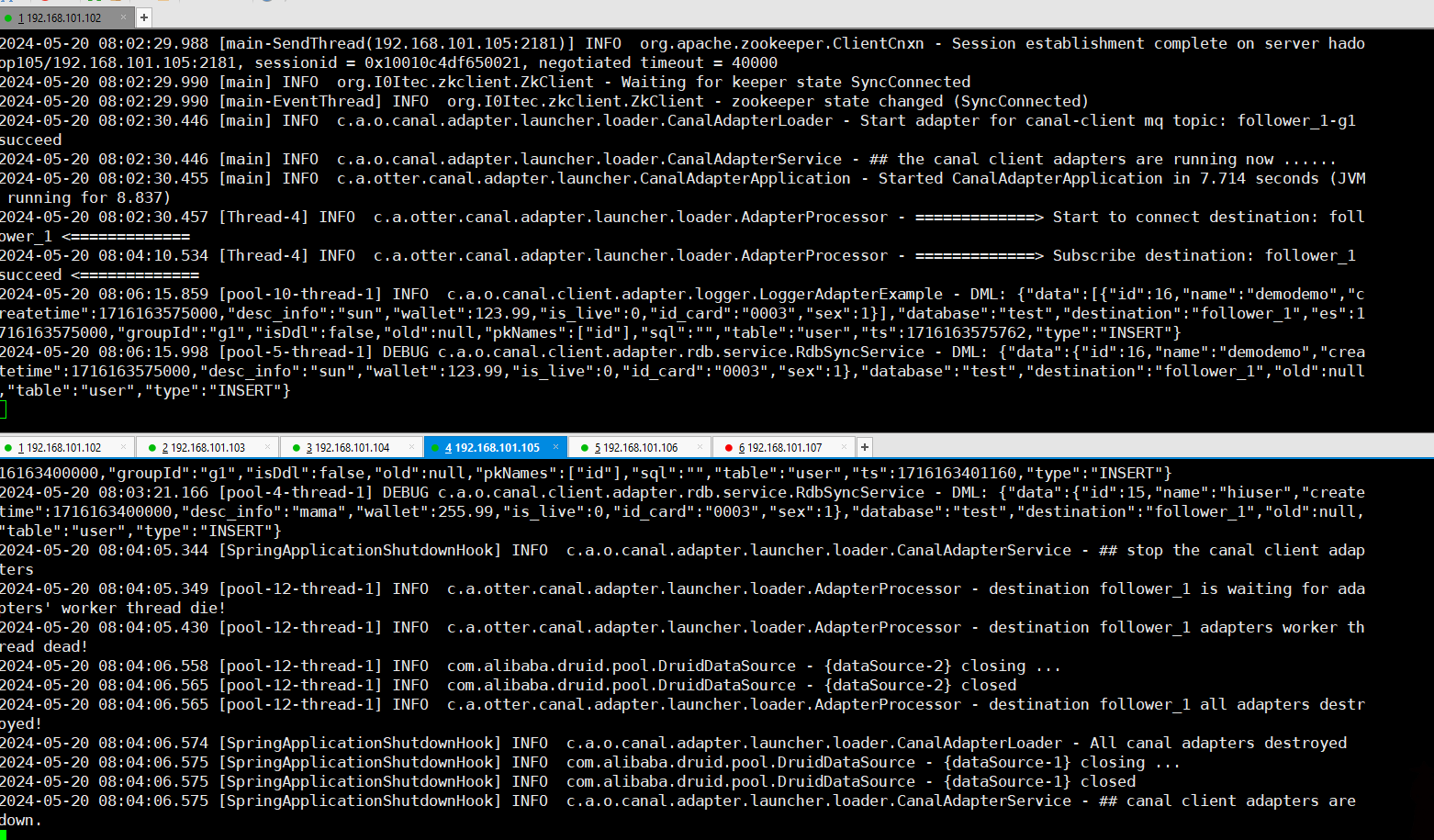

2024-05-20 07:25:53.290 [main] INFO c.a.otter.canal.adapter.launcher.CanalAdapterApplication - Started CanalAdapterApplication in 8.146 seconds (JVM running for 9.228)通过日志观察可以看到实际工作的是102的canal-adapter。

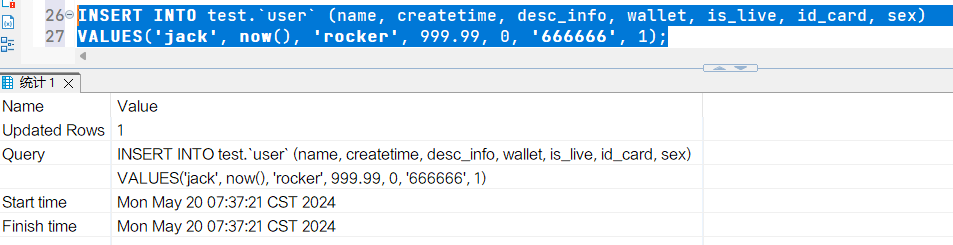

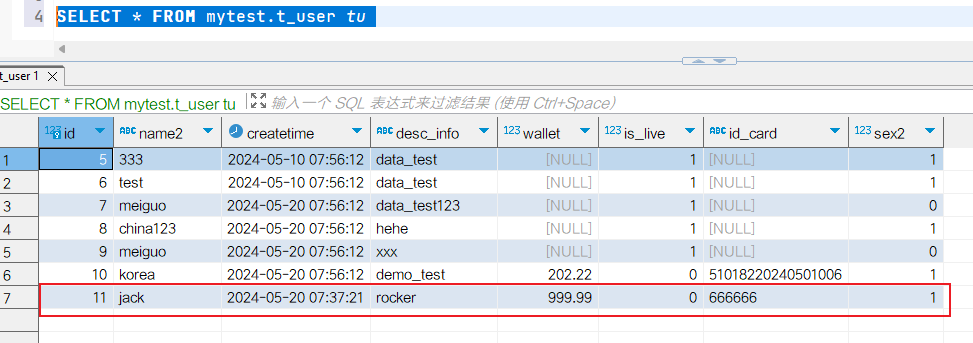

5.3 Test canal-adapter

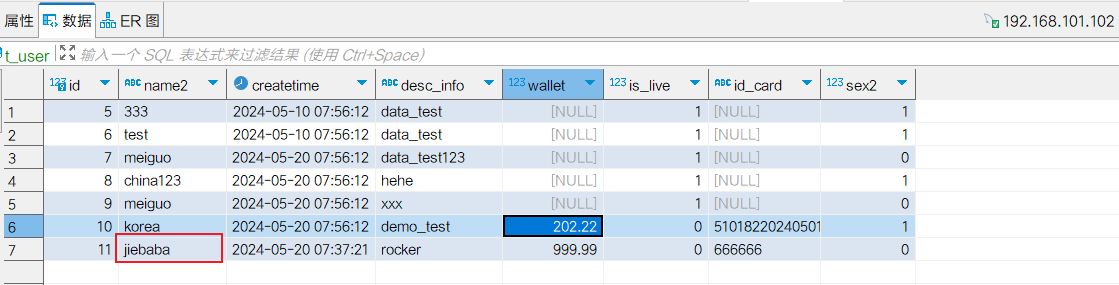

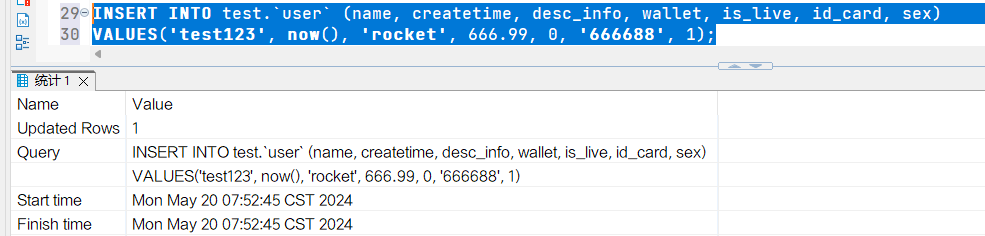

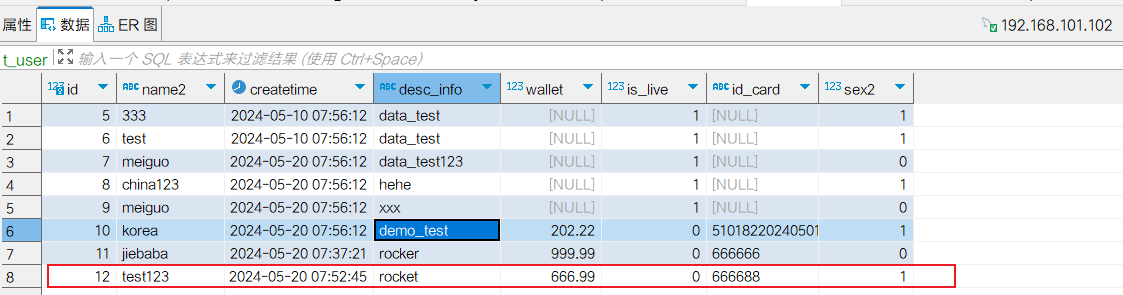

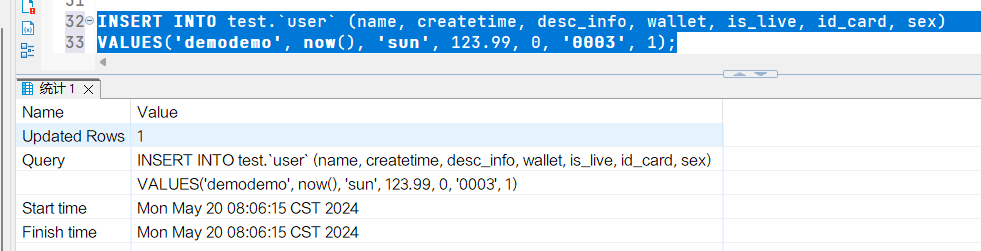

- Insert a row on machine 105

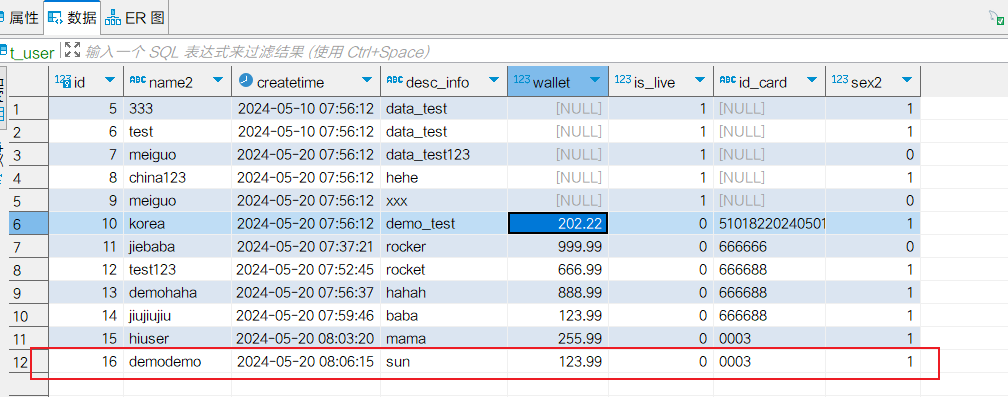

- Query on 102: the row has been synchronized automatically

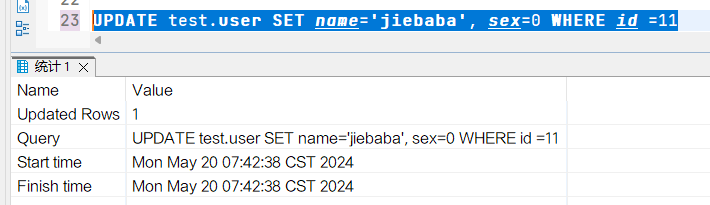

- Update the name of the row with id 11 to jiebaba

- Query on 102: the row has been updated

6. Test cluster high availability

6.1 canal-server failure

- Stop the canal-server service on 192.168.101.104

[jack@hadoop104 canal-deployer]$ sh bin/stop.sh

hadoop104: stopping canal 7638 ...

Oook! cost:1

The canal-admin page shows that 104 has disconnected:

- Coordinated by Zookeeper, the standby canal-server switches to the running state and takes over. Check which canal-server is currently active:

[zk: localhost:2181(CONNECTED) 3] get /otter/canal/destinations/follower_1/running

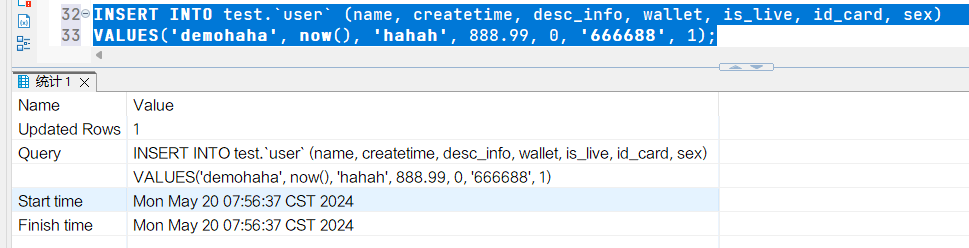

{"active":true,"address":"192.168.101.103:11111"}- 执行数据测试,查看数据同步,发现数据同步依然有效

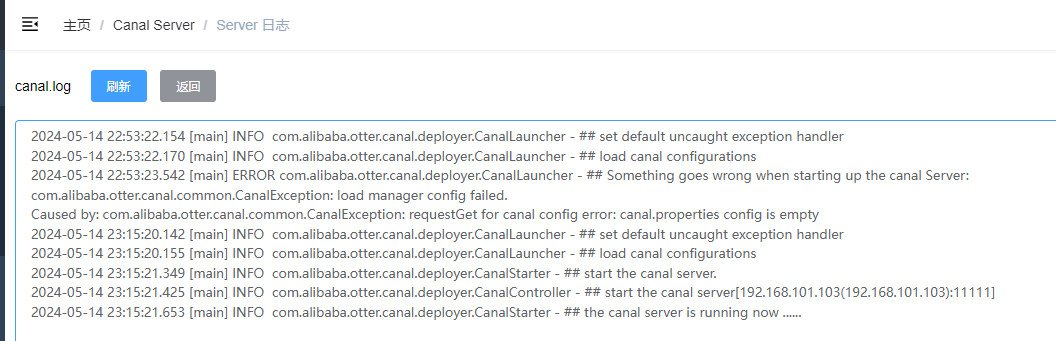
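The manual check above (reading the running znode before and after stopping a server) can be turned into a small polling helper. The sketch below is an assumption-laden illustration rather than a canal API: `fetch_running` is a hypothetical callable that returns the JSON text of /otter/canal/destinations/follower_1/running (or None while the node is missing), and how it talks to zk is left to the reader.

```python
import json
import time


def active_address(payload):
    """Return the address from the running-node JSON, or None if not active."""
    data = json.loads(payload)
    return data["address"] if data.get("active") else None


def wait_for_failover(fetch_running, old_address, timeout=30.0, interval=1.0,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll until a server other than old_address is active; None on timeout."""
    deadline = clock() + timeout
    while True:
        payload = fetch_running()
        if payload:
            address = active_address(payload)
            if address and address != old_address:
                return address
        if clock() >= deadline:
            return None
        sleep(interval)


if __name__ == "__main__":
    # Simulated znode contents: missing, still the old server, then the new one.
    responses = iter([
        None,
        '{"active":true,"address":"192.168.101.104:11111"}',
        '{"active":true,"address":"192.168.101.103:11111"}',
    ])
    print(wait_for_failover(lambda: next(responses), "192.168.101.104:11111",
                            timeout=5, interval=0))
```

Passing `sleep` and `clock` as parameters keeps the helper easy to test without waiting in real time.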

6.2 canal-admin failure

- Stop the canal-admin service on machine 102

[jack@hadoop102 canal-admin]$ sh bin/stop.sh

hadoop102: stopping canal 91473 ...

Oook! cost:1

- Run the data test and check synchronization: data is still synchronized

6.3 canal-adapter failure

- Next, stop the canal-adapter service on 102

[jack@hadoop105 bin]$ sh stop.sh

hadoop105: stopping canal 8213 ...

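Oook! cost:4

- Coordinated by Zookeeper, the standby canal-adapter switches to the running state and takes over.

- Run the data test and check synchronization: data is still synchronized

- Check the logs: 102 has stopped working and 105 has taken over data synchronization: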